If the library community aims to preserve the digital collections created through LSDIs, a crucial preliminary step will be to assess the community’s readiness to assume such a role. Several efforts have been made in the past decade to develop standards and best practices that could provide a technical and organizational framework for managing digital preservation activities. A comprehensive discussion of this topic is beyond the scope of this paper. This section highlights some of the key components of a preservation program for digitized content.

Digital preservation within the context of LSDIs is a multifaceted topic. Two definitions are key to this activity:

- Digitizing refers to the process of converting analog materials into digital form. If users access the digital copies instead of the analog originals (thus minimizing handling of the originals), the digital copies may be considered to have performed a preservation function. They can also perform a preservation function by serving as backups. In some cases, digital reformatting is guided by established best practices and technical specifications to ensure that the materials are being converted at a level of quality that will endure and will support future users’ needs.

- Preserving digital objects entails the preservation of digitized materials, including those resulting from the reformatting process, to ensure their longevity and usability. In the context of this paper, the digital objects preserved may be the products of preservation reformatting or of digitization efforts in support of other purposes, such as creating a digital copy in support of online access.

The framework laid out in this section weaves through these two distinct but interrelated domains.

3.1 Selection for Digitization and Preservation Reformatting

Selection and curation decisions were prominent features of early digitization initiatives. Decisions about what to digitize were influenced by traditional preservation reformatting technologies, and they favored public domain materials that had enduring value for scholarship.29 This approach was driven by a need to invest limited funds in unique aspects of institutional collections and by an interest in identifying core literature in support of research and pedagogy. Preservation of rare and brittle materials received priority; however, because early digitization technologies required that books be disbound before they could be scanned, the potential for damage to originals was a critical factor in selection decisions.

Early digitization initiatives generated lively discussion in the library community about the role of digitized content from access and preservation perspectives. Some librarians, who believed that digital files should be used as preservation master copies, expressed their commitment to preserving digital surrogates, even while acknowledging that reformatting cannot capture all characteristics inherent in the original. Some librarians believed that selection decisions should be based strictly on the need to provide access. Still other librarians felt that digital surrogates should not be considered as substitutes for the originals, but that they should still be of the highest-possible quality.

In 2004, the Association of Research Libraries (ARL) endorsed digitization as an accepted reformatting option and stated that the choice was not prescriptive and remained a local decision.30 ARL encouraged those engaged in digital reformatting to make an organizational and financial commitment to adhere to best practices and standards.

The results of these early digitization efforts, which emphasized curatorial decisions and image quality, can be characterized as “boutique collections.” Because of the magnitude of today’s LSDIs, such selection criteria have largely been pushed aside. Nevertheless, the new generation of digitization projects raises the following questions in regard to selection for digitization and preservation:

- Should we commit to preserve all the digital materials created through the LSDIs, implement a selection process to identify what needs to be preserved, or assign levels of archival efforts that match use level? According to a widely cited statistic, 20 percent of a collection accounts for 80 percent of its circulation. A multiyear OCLC study of English-language book circulation at two research libraries revealed that about 10 percent of books accounted for about 90 percent of circulation.31 An analysis of circulation records for materials chosen for Cornell University Library’s Microsoft initiative showed that 78 percent to 90 percent of those items had not circulated in the last 17 years.

In Cornell’s case, the circulation frequency may be lower than average because of the age of the materials sampled: all were published before 1923. Nevertheless, the findings support the general perception that many of the materials covered by LSDIs are seldom used. Because selection for preservation can be time-consuming and expensive, the trend will likely be to preserve everything for “just-in-case” use. However, economic realities necessitate careful consideration of how much to invest in preserving unused content. This quandary is explored in Section 5.9.

- Will electronic access spur new demand for materials seldom used in print? Libraries contain deep and rich collections. However, users are often hampered in locating and obtaining materials of interest because institutions use different library management systems, with varying discovery and retrieval mechanisms. Anderson argues that the 80/20 rule exists in the physical world because we chop off the “long tail”; in other words, the physical inaccessibility of an out-of-print or obscure work limits the demand for it.32 On the basis of this argument, Dempsey makes a compelling case for aggregating supply and demand at the network level rather than at the level of individual libraries.33 Pooling the resources of many institutions’ collections through LSDI partnerships, it is assumed, will find users for materials that have never been checked out.
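The circulation figures cited above (the 80/20 rule, the OCLC 10/90 finding, and the share of items that never circulated) are simple concentration measures. The following is a minimal sketch of how they can be computed from per-item loan counts. The function names are hypothetical and the loan counts are invented for illustration; they are not data from the studies cited.

```python
def top_share(loan_counts, top_fraction):
    """Share of all loans accounted for by the most-used `top_fraction` of items
    (0.2 corresponds to the "80/20" rule)."""
    counts = sorted(loan_counts, reverse=True)
    total = sum(counts)
    if total == 0:
        return 0.0
    k = max(1, round(len(counts) * top_fraction))  # number of items in the "head"
    return sum(counts[:k]) / total


def never_circulated(loan_counts):
    """Fraction of items with no recorded loans (the far end of the "long tail")."""
    counts = list(loan_counts)
    return sum(1 for c in counts if c == 0) / len(counts) if counts else 0.0


# Invented loan counts for ten items; not data from the studies cited in the text.
loans = [45, 30, 5, 3, 2, 1, 0, 0, 0, 0]
print(f"Top 20% of items account for {top_share(loans, 0.2):.0%} of loans")
print(f"Items never borrowed: {never_circulated(loans):.0%}")
```

With these made-up counts, the two most-used items account for 87 percent of loans and 40 percent of items were never borrowed. Running the same measures on circulation pooled across several institutions would be one way to test whether aggregation actually shortens the tail.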

- Will LSDIs’ use of high-speed, automated digitizing processes disenfranchise materials needing special handling? Most of the current LSDIs exclude special collections, which comprise rare or valuable materials including books, manuscripts, ephemera and realia, personal and professional papers, photographs, maps, fine art, audiovisual materials, and other unique documents and records.34 Such materials require special handling because of their scarcity, age, physical condition, monetary value, or security requirements; consequently, they have high digitization costs. Early digitization efforts often included funds and services to prepare special and rare materials for digitization, including such activities as conservation treatment, repair or replacement of fragile pages, and rebinding. Such processes are difficult to accommodate in a large-scale initiative, and converting rare and special materials significantly slows the overall digitization project. Are these valuable collections being disenfranchised because of the emphasis on digitizing what can be processed quickly? Is there a danger that LSDI libraries will devote such a big share of their resources to large-scale efforts that little will remain to digitize special collections? Responding to such concerns, RLG Programs is trying to raise awareness in the special collections community of the implications of digitizing only widely held material.35

- Is there a means for recording gaps in collections and within publications? Selection decisions for the current LSDIs are influenced by limitations of current digitization technologies as well as by the interests of the commercial partners. Improved bound-volume digitization technologies have greatly expanded the types of materials that can be digitized. However, current equipment still limits the books that can be digitized based on size (height, width, and length), condition, binding style, and paper type. The Google Five, the five pioneering libraries that signed on first with Google, report that some books are excluded because of fragile condition or problems with binding.36 Some materials are excluded because they lack bar codes. (Although there is an established process for bar coding, some libraries avoid this process to simplify the workflow.) In addition, book sections that would require special treatment, such as maps and foldouts, often cannot be accommodated in a high-speed digitization process; such books are digitized with portions missing or not digitized at all. Incompleteness caused by missing sections has serious implications for the authenticity of digitized content. This aspect of LSDIs raises questions about tracking mechanisms for recording omitted materials and plans for adding them to the digitized corpus. Information on how LSDI survey respondents handle this issue is provided in the Appendix.

- How much duplication should there be in selection and digitization efforts? In 2005, Lavoie et al. used the WorldCat union catalog to analyze book collections of the five libraries then participating in the Google Print for Libraries project.37 After duplicate holdings across the five institutions were removed, the Google libraries together held 10.5 million unique print books out of the 32 million in WorldCat. Of those titles, 39 percent were held by at least two of the five libraries. This suggests that four out of every ten digitized books may be redundant (assuming that digitization of titles rather than manifestations was the project goal).38 On the basis of these preliminary data, the authors questioned the degree of redundancy associated with the digitization efforts and identified potential duplication as an area for further study.

There is a possibility for duplication both within a specific project (i.e., the same material being digitized by more than one library participating in a Microsoft initiative) and among different initiatives (i.e., the same materials being digitized both by Google and Microsoft). As these initiatives expand, the need for comprehensive collection analysis becomes more pressing. While preservation specialists acknowledge that redundancy is important for securing digital content over time, the type of redundancy that results from these approaches appears opportunistic and hence underlines the need for collections analysis across projects. It is telling that Google advertised in March 2007 for a library collections specialist to analyze the collections scanned to date and to help Google develop new library relationships with the goal of digitizing the world’s books.39
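Lavoie et al. worked from WorldCat holdings. The sketch below shows how the two figures in such an analysis are derived: the number of unique titles across partners and the share held by two or more of them. It assumes that each library's holdings can be reduced to a set of title-level identifiers. The library names and identifiers are hypothetical. Whether the identifiers represent titles or manifestations determines which kind of redundancy is being measured.

```python
from collections import Counter


def overlap_summary(holdings):
    """holdings maps a library name to the set of title identifiers it holds.
    Returns (unique_titles, titles_held_by_two_or_more, share_held_by_two_or_more)."""
    counts = Counter()
    for titles in holdings.values():
        counts.update(set(titles))          # count each title once per library
    unique = len(counts)
    multi = sum(1 for n in counts.values() if n >= 2)
    return unique, multi, (multi / unique if unique else 0.0)


# Hypothetical holdings for three libraries (e.g., keyed by OCLC numbers).
holdings = {
    "Library A": {"t1", "t2", "t3", "t4"},
    "Library B": {"t3", "t4", "t5"},
    "Library C": {"t4", "t6", "t7", "t8", "t9", "t10"},
}
unique, multi, share = overlap_summary(holdings)
print(unique, multi, f"{share:.0%}")
```

Here, 10 unique titles are held, and 2 of them (20 percent) appear in more than one collection. If every library digitized its own copy, those titles would be scanned more than once.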

Redundancy concerns bring registry-development efforts once again to the fore. The DLF/OCLC Registry of Digital Masters (RDM) was conceptualized in 2001 to provide a central place for libraries to search for digitally preserved materials.40 By registering digitized objects with the RDM, a library indicates that it is committed to preserving digitized collections. One of the benefits of the registry is the assurance that an institution need not digitize materials that are already in the registry, thereby saving resources. The potential role and current status of the registry are discussed in greater detail in Section 5.7.

- What legal rights do participating libraries have to preserve in-copyright content digitized through LSDIs? Google’s decision to include copyright-protected materials in its initiative has been the subject of much discussion as well as of a legal challenge. Some partners, such as the University of California, the University of Virginia, and the University of Michigan, opted to make all their collections that fit the requirements available to Google.41 Others, including Harvard, Oxford, and Princeton Universities, decided to limit their participation to public domain content. An analysis by Brian Lavoie of OCLC found that 80 percent of the original five Google libraries’ materials were still in copyright.42 This aspect of the LSDIs raises the issue of the participating libraries’ legal rights to preserve copyrighted content digitized through LSDIs. For example, is it legally permissible for a library to rescan originals that are not in the public domain to replace unusable or corrupted digital objects? What are the copyright implications of migrating a digital version of materials in copyright from the TIFF to the JPEG2000 file format?

Section 108 of the U.S. Copyright Law articulates the rights to and limitations on reproduction by libraries and archives;43 however, the right to take action to preserve digitized content that is copyright protected is still under study by the Section 108 Study Group convened by the Library of Congress.44 The Study Group is charged with updating the Copyright Act’s balance between the rights of creators and copyright owners and the needs of libraries and archives within the digital realm. The group is also reexamining the exceptions and limitations applicable to digital preservation activities of libraries and archives.45

When the CIC libraries joined the Google Initiative, they decided to archive only materials in the public domain and opted not to receive digital copies of materials in copyright until a general preservation exception (the right to preserve materials in copyright) is added to Section 108.46

3.2 Content Creation

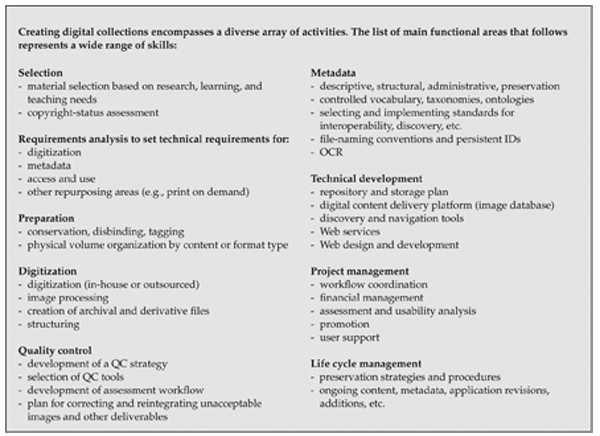

Digital preservation requires a sequence of decisions and actions that begin early in an information object’s life cycle. Standard policies and operating principles for digital content creation are the foundation of a successful preservation program. Table 2 summarizes the activities involved in creating digital collections. These guidelines apply to digitization initiatives with preservation as an explicit mandate. Most of the current LSDIs do not fall within that category.

The purpose of this section is to inform discussions of the differences between access- and preservation-driven reformatting. It reviews the following aspects of content creation, all of which are critical in producing high-quality collections:

- technical specifications for image-quality parameters for master and archival files

- requisite preservation metadata with descriptive, administrative, structural, and technical information to enhance access as appropriate, enable content management, and facilitate discovery and interoperability

- quality-control protocols for digital images and associated data

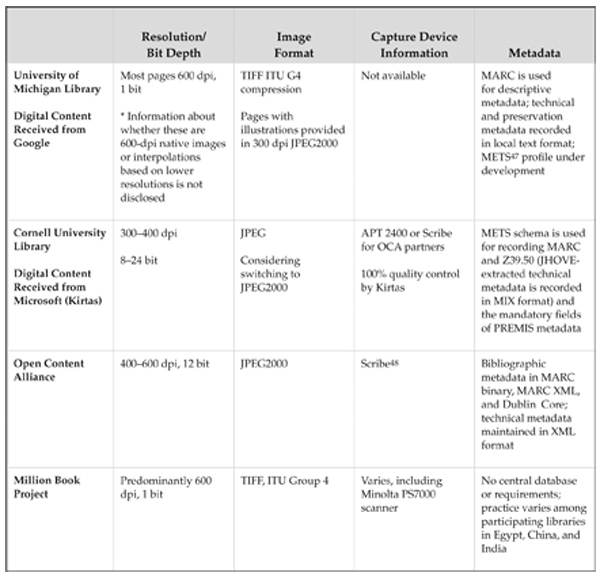

Table 3 provides examples of digitization specifications used in different initiatives for digital copies received by libraries, including resolution, bit depth, image format, capture device information, and metadata standards supported. Additional content-creation information from sample LSDI libraries, including quality control parameters, is provided in the Appendix.


Table 2. Framework for a Digitization Project


Table 3. Examples of Digitization Specifications Used in Different Initiatives for Digital Copies Received by Libraries

3.2.1 Image-Quality Procedures for Large-Scale Digitization Initiatives

LSDIs use a variety of quality parameters that are often linked to the access requirements of the hosting companies and to the capabilities of the digitization equipment and applications. There is active debate about what is acceptable and whether current capture quality will support future viewing and processing needs.49 Because of nondisclosure agreements and varying practices based on proprietary digitization configurations, it is hard to obtain information on LSDIs’ current digitization and metadata specifications. Some examples gathered as a result of the LSDI preservation survey are provided in the Appendix. Most of the participating libraries have been involved in digitization initiatives for well over a decade, so it is useful to start with a synopsis of prevailing preservation standards and best practices for digital material.50

The term digital imaging entered the library lexicon predominantly through the preservation community, which had an early interest in using digitization as a reformatting tool. Most of the initial projects approached the image-quality issue from a perspective that was heavily influenced by microfilming requirements and micrographic industry standards. The recommendations that came out of 1990s image-benchmarking studies still influence discussions about image quality. These requirements were developed to ensure that a book would need to be digitized only once. The goal was to create “preservation-worthy” images that would be faithful reproductions of the original material and rich enough in quality to justify investing in their long-term archiving. There was also an emphasis on using digitization techniques that would minimize damage to original materials. Although user needs were reflected in the benchmarks, most of the effort was directed at capturing as much detail and nuance from the print materials as possible. A distinction was drawn between master (archival) images, which are optimized for longevity and repurposing, and derivative (access) files, which support specific uses, such as printing or online viewing. There was strong endorsement for using lossless compression for the master images,51 and TIFF became the de facto archival format.

The digitization efforts of the early 1990s resulted in well-endorsed best practices and benchmarks that have been widely adopted. However, today’s digitization efforts challenge some of the prevailing practices not only because of their scale but also because of the transformation of digital library technologies and user preferences.

- Doing Today’s Job with Yesterday’s Tools

Imaging devices have improved since the mid-1990s; however, we continue to rely on the results of early work on assessing digitization devices and image quality. Early efforts emphasized fixed spatial resolution and bit depth, a quantitative approach that does not always indicate the capture quality of digitization equipment. The same resolution setting, such as 600 dpi, may produce different results on two different machines. As Puglia and Rhodes point out, the focus has shifted to high spatial resolution and high bit sampling.52 The trend is moving from testing the capabilities of digitization equipment to assessing specific device performance parameters. High spatial resolution and bit depth are ideal; however, they alone do not guarantee satisfactory images. Therefore, more emphasis must be placed on assessing outcomes.

- Role of Image-Quality Targets

To verify the calibration of the scanning equipment and to ensure the best-possible images, early initiatives used image-quality targets. Libraries required delivery of specified scanned technical targets during the installation and configuration of scanning equipment, and they relied on these targets during the production of images to assess resolution, tonality, dynamic range, noise, and color. These targets were also seen as instrumental in preserving technical information that may be needed for certain future preservation actions, such as file migration.53

Today, LSDIs do not consistently use such quality targets. This may lead to a lack of common protocols for assessing image quality and for making adjustments (e.g., changing color space). The Digital Image Conformance Evaluation (DICE) tool being developed at the Library of Congress by Don Williams, Peter Burns, and Michael Stelmach is promising and will result in an assessment target and associated software for automated analysis.54

- File Format and Compression

TIFF has been the de facto file format for archival copies of digital images since the early 1990s.55 Adobe Systems controls the TIFF specification, which has not had a major update since 1992.56 TIFF has many advantages, such as support for lossless compression, which is strongly favored by the preservation community because it retains full pixel information. However, some institutions engaged in large-scale efforts are considering a switch to JPEG2000, which can be lossy or lossless depending on the compression algorithm used.57 JPEG2000 is an International Organization for Standardization (ISO) standard and permits a wide range of uses. It allows metadata to be embedded in the file. Other advantages include scalability by resolution (several resolution levels are included in one file to support different views), availability of color channels to manage color appearance information, and bit-depth support up to 48 bits.58 JPEG2000 uses a wavelet-based compression technique that produces smaller files, which are more efficient to store, process, and transfer. However, the standard is not yet commonly used, and Web browsers do not adequately support it. The number of tools available for JPEG2000 is limited but continues to grow.

3.2.2 Preservation Metadata

PREservation Metadata: Implementation Strategies (PREMIS) defines preservation metadata as “the information a repository uses to support the digital preservation process.”59 It includes data to support maintaining viability, renderability, understandability, authenticity, and identity in a preservation context. Although the theory and standards behind preservation metadata are sound, its long-term cost-effectiveness and utility remain unknown.

Preservation metadata incorporates a number of metadata categories, including descriptive, administrative (including rights and permissions), technical, and structural. PREMIS emphasizes recording digital provenance (the history of an object). Documenting the attributes of digitized materials in a consistent way makes it possible to identify the provenance of an item as well as the terms and conditions that govern its distribution and use. In digitization initiatives with homogeneous and consistent practices, it may be sufficient to capture preservation metadata at the collection level without recording details at page level.
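The suggestion that homogeneous projects might record provenance at the collection level rather than the page level can be illustrated with a small data model. The following is a minimal sketch that assumes a hypothetical in-memory model. Its field names are only loosely modeled on PREMIS event semantic units, and it is not a PREMIS serialization. Events recorded once on a collection are inherited by every item in it, and only exceptions are recorded per item.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    """One provenance event; fields loosely modeled on PREMIS event semantic units
    (event type, date/time, outcome, linked agent)."""
    event_type: str
    date_time: str  # ISO 8601 in one fixed-width UTC format, so string order = time order
    outcome: str
    agent: str
    detail: str = ""


@dataclass
class Collection:
    identifier: str
    events: list = field(default_factory=list)  # apply to every item in the collection


@dataclass
class Item:
    identifier: str
    collection: Collection
    events: list = field(default_factory=list)  # item-specific exceptions only

    def provenance(self):
        """Full history: inherited collection-level events plus item-level events."""
        return sorted(self.collection.events + self.events, key=lambda e: e.date_time)


# Hypothetical identifiers, agents, and dates, for illustration only.
batch = Collection("coll-001", [
    Event("capture", "2007-03-01T09:00:00Z", "success", "scanning vendor"),
    Event("ingestion", "2007-04-15T12:00:00Z", "success", "local repository"),
])
book = Item("book-0042", batch, [
    Event("fixity check", "2007-05-01T08:30:00Z", "failure", "local repository",
          "checksum mismatch on one page image"),
])
for e in book.provenance():
    print(e.date_time, e.event_type, e.outcome)
```

The trade-off is the one noted in the text. Collection-level recording is cheap, but it is only accurate if the practices behind each item really were uniform.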

Although incorporated in preservation metadata, technical metadata merits special mention because of its role in supporting preservation actions. Published in 2006, ANSI/NISO Z39.87 Technical Metadata for Still Images lays out a set of metadata elements to facilitate interoperability among systems, services, and software as well as to support continuing access to and long-term management of digital image collections.60 It includes information about basic image parameters, image quality, and the history of change in document processes applied to image data over the life cycle. The strength and weakness of Z39.87 is its comprehensive nature. Although in many ways an ideal framework, it is complex and expensive to implement, especially at the image level. While most of the technical metadata can be extracted from the image file itself, some data elements relating to image production are not inherent in the file and need to be added to the preservation metadata record.61 Google does not allow access to its digitization centers because of the proprietary hardware and software in use. Therefore, it may not be possible to gather certain technical specifications for image production in its LSDI. The role of technical metadata (or lack thereof) in facilitating preservation activities is not yet well documented.
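Much of the technical metadata described above can be read directly from the image file, whereas production details such as the capture device or operator cannot. The following minimal sketch uses only the Python standard library to read a few baseline TIFF 6.0 tags (dimensions, bit depth, compression, resolution) from the first image file directory. It handles only SHORT, LONG, and RATIONAL field types. The test file is a synthetic header built in memory with no pixel data, and the function names are hypothetical. Mapping these values onto a Z39.87 record is not shown.

```python
import struct

# A few baseline TIFF 6.0 tag numbers and the names the specification gives them.
TAGS = {256: "ImageWidth", 257: "ImageLength", 258: "BitsPerSample",
        259: "Compression", 262: "PhotometricInterpretation",
        282: "XResolution", 283: "YResolution", 296: "ResolutionUnit"}
# Field types handled: 3 = SHORT, 4 = LONG, 5 = RATIONAL (two LONGs).
TYPES = {3: ("H", 2), 4: ("I", 4), 5: ("II", 8)}


def read_tiff_tags(data: bytes) -> dict:
    """Extract selected tags from the first image file directory (IFD) of a TIFF."""
    order = {b"II": "<", b"MM": ">"}.get(data[:2])      # little- or big-endian
    if order is None:
        raise ValueError("not a TIFF file")
    magic, ifd = struct.unpack(order + "HI", data[2:8])
    if magic != 42:
        raise ValueError("not a TIFF file")
    (count,) = struct.unpack(order + "H", data[ifd:ifd + 2])
    tags = {}
    for i in range(count):
        entry = data[ifd + 2 + 12 * i: ifd + 14 + 12 * i]  # 12-byte IFD entry
        tag, ftype, n = struct.unpack(order + "HHI", entry[:8])
        if tag not in TAGS or ftype not in TYPES:
            continue
        fmt, size = TYPES[ftype]
        if size * n <= 4:                      # small values are stored inline
            raw = entry[8:8 + size * n]
        else:                                  # otherwise the entry holds an offset
            (offset,) = struct.unpack(order + "I", entry[8:12])
            raw = data[offset:offset + size * n]
        values = struct.unpack(order + fmt * n, raw)
        if ftype == 5:                         # RATIONAL: numerator / denominator
            values = tuple(values[j] / values[j + 1] if values[j + 1] else 0.0
                           for j in range(0, len(values), 2))
        tags[TAGS[tag]] = values[0] if len(values) == 1 else values
    return tags


# Build a tiny synthetic little-endian TIFF header and IFD (no pixel data) to test with.
def _entry(tag, ftype, n, value):
    return struct.pack("<HHI", tag, ftype, n) + value.ljust(4, b"\0")


entries = [_entry(256, 3, 1, struct.pack("<H", 5100)),
           _entry(257, 3, 1, struct.pack("<H", 6600)),
           _entry(258, 3, 1, struct.pack("<H", 8)),
           _entry(259, 3, 1, struct.pack("<H", 1)),      # 1 = uncompressed
           _entry(282, 5, 1, struct.pack("<I", 74))]     # offset of the rational below
sample = (b"II" + struct.pack("<HI", 42, 8) + struct.pack("<H", len(entries))
          + b"".join(entries) + struct.pack("<I", 0) + struct.pack("<II", 600, 1))
print(read_tiff_tags(sample))
```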

3.2.3 Descriptive and Structural Metadata

It is difficult to consider an image to be of high quality unless there is requisite metadata to support identification, access, discovery, and management of digital objects.62 Descriptive metadata ensures that users can easily locate, retrieve, and authenticate collections. The current LSDIs rely on bibliographic records extracted from local Online Public Access Catalogs (OPACs) for descriptive metadata. Compared with early digitization initiatives, current LSDIs capture minimal structural metadata. There is an effort to promote the use of persistent IDs both by search engines and by participating libraries to ensure that globally unique IDs are assigned to digitized books.

Structural metadata facilitates navigation and presentation of digital materials. It provides information about the internal structure of resources, including page, section, chapter numbering, indexes, and table of contents. It also describes relationships among materials and binds the related files and scripts through file-naming conventions and the organization of files in system directories. Current LSDI digitization processes often do not capture structuring tags such as title page, table of contents, chapters, parts, errata, and index. Gathering and recording such data are usually neither feasible nor cost-effective within an LSDI workflow. It is important to include structural metadata in the definition and assessment of digital object quality. For example, checking the availability of structural metadata for complex materials such as multivolume books is critical to retaining the relationship information among multiple volumes.
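A check of the kind suggested for multivolume books can be automated once structural data exist. The following is a minimal sketch that assumes a hypothetical record layout (a simple dictionary per volume, not METS) and a hypothetical local policy that every volume must carry a "title page" tag. It reports volumes missing from a set and volumes lacking required structural tags.

```python
def structural_gaps(work_id, total_volumes, volumes, required_tags=("title page",)):
    """Report missing volumes and missing structural tags for a multivolume work.

    `volumes` is a list of dicts: {"volume": int, "tags": {page_seq: tag}}.
    The record layout and the required tags are hypothetical."""
    problems = []
    present = {v["volume"] for v in volumes}
    for n in range(1, total_volumes + 1):
        if n not in present:
            problems.append(f"{work_id}: volume {n} of {total_volumes} missing")
    for v in sorted(volumes, key=lambda vol: vol["volume"]):
        tags = set(v["tags"].values())
        for tag in required_tags:
            if tag not in tags:
                problems.append(f"{work_id} v.{v['volume']}: no '{tag}' tag")
    return problems


volumes = [
    {"volume": 1, "tags": {1: "title page", 5: "table of contents", 9: "chapter"}},
    {"volume": 3, "tags": {3: "chapter", 210: "index"}},
]
for problem in structural_gaps("work-17", 3, volumes):
    print(problem)
```

A report like this also serves as a record of gaps in the digitized corpus, which is the tracking mechanism raised earlier in the discussion of omitted materials.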

3.2.4 Quality Control

Quality control (QC) is an essential component of library digitization initiatives.63 It includes procedures and techniques to verify the quality, accuracy, and consistency of digital products encompassing images, OCR output, and other metadata files. The key factors in image-quality assessment are resolution, color and tone, and overall appearance. Intent, such as reproducing a physical item, restoring to original appearance (e.g., removing stains), improving legibility, or optimizing for Web presentation or printing, is also important.

Sometimes a distinction is drawn between quality control and quality review (or quality assurance). The former refers to the vendor or in-house inspection conducted during production; the latter indicates the inspection of final products by project staff. (In this paper, QC will be used to refer to both processes.) Implementing a QC program can be very time- and labor-intensive, and requires special skills and equipment. Although automated tools64 exist for inspecting certain aspects of quality (such as file naming and integrity checks), some quality elements, such as missing pages and imaging distortions, can be detected only through visual inspection.
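Automated checks of the kind mentioned above (file naming and integrity) are straightforward to script. The following is a minimal sketch that assumes a hypothetical naming convention of eight-digit page-sequence numbers and a vendor-supplied manifest of SHA-256 checksums. Neither convention comes from the LSDIs discussed here. A sequence gap reveals only a missing file. A page that was never captured, or an image that is blurred, still requires visual inspection.

```python
import hashlib
import re
import tempfile
from pathlib import Path

# Hypothetical convention: eight-digit page sequence plus extension, e.g. 00000001.tif
NAME_PATTERN = re.compile(r"\d{8}\.(?:tif|jp2)")


def sha256_of(path, chunk_size=1 << 20):
    """Checksum a file in chunks so large page images need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def check_volume(directory, manifest):
    """manifest maps file name -> expected SHA-256 hex digest."""
    problems = []
    files = {p.name: p for p in Path(directory).iterdir() if p.is_file()}
    for name in sorted(files):                                  # naming check
        if not NAME_PATTERN.fullmatch(name):
            problems.append(f"bad file name: {name}")
    for name, expected in sorted(manifest.items()):             # integrity check
        if name not in files:
            problems.append(f"missing file: {name}")
        elif sha256_of(files[name]) != expected:
            problems.append(f"checksum mismatch: {name}")
    seqs = {int(name[:8]) for name in files if NAME_PATTERN.fullmatch(name)}
    if seqs:                                                    # sequence-gap check
        for gap in sorted(set(range(1, max(seqs) + 1)) - seqs):
            problems.append(f"sequence gap: page {gap:08d}")
    return problems


with tempfile.TemporaryDirectory() as d:
    samples = {"00000001.tif": b"page one", "00000003.tif": b"page three",
               "scan_final.tif": b"stray file"}
    for name, data in samples.items():
        (Path(d) / name).write_bytes(data)
    manifest = {  # hypothetical vendor-supplied checksums
        "00000001.tif": hashlib.sha256(b"page one").hexdigest(),
        "00000003.tif": hashlib.sha256(b"page 3").hexdigest(),  # will not match
    }
    problems = check_volume(d, manifest)
for p in problems:
    print(p)
```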

The initial QC efforts of the library community were quite thorough and often involved 100 percent inspection, with visual comparison of digital images against the print pages to detect subtle artifacts such as wavy patterns, banding, and Newton’s rings. Today, there are well-established image-quality assessment processes; however, they were developed for projects that digitized small subsets of library materials. Although there is some reliance on automated QC tools, most quality assurance is done manually. Owing to the sheer volume of digitized content, it is not realistic to implement the kind of QC program used in past projects. As institutions convert some 10,000–40,000 books per month, it is clear that QC practices need to be reevaluated to decide what best suits the budget, technical infrastructure, staff qualifications, materials, and project time line.

Currently, the Google initiative does not correct images based on QA procedures conducted by the participating libraries. However, it combines library partners’ feedback on image condition with its own analysis to develop automated QC methods that improve image quality. As indicated in the survey results in the Appendix, the participating libraries are mainly recording trends and patterns for the purpose of improving the quality of future scans. Microsoft and the OCA have workflows in place for correcting images based on the feedback received from participating libraries.

The Google initiative often has been criticized for producing scans with missing pages or poor image quality (e.g., blurs or other markings). Townsend argues that a closer look at the digitized materials on the Google Books site raises concerns about the variability of image quality and erroneous or incomplete metadata, especially in serials literature.65 He is concerned that these problems will compound over time and that it may be difficult to go back and make corrections when the imperative is to move forward. Errors and omissions may become an important issue, especially if there is full reliance on digital copies and print versions are not readily available. Of course, even if the quality is inconsistent, the digitized books support discovery and can provide some level of emergency backup if something were to happen to the print books. However, inconsistent quality and gaps pose a serious preservation issue if the digitized books are used as an excuse to discard all the original books.

The digitization process captures the page image, and OCR tools are required to extract the text in a machine-readable form. The term OCR generally refers to the process by which scanned images are electronically “read” to convert them into text to support full-text searching and other processes that require editable text. Although OCR used to be optional (often because of funding constraints) in digitization projects, today’s LSDIs automatically include such a process in order to create sophisticated full-text indexes to enable retrieval of materials by keyword.

The accuracy of OCR depends on the quality of images and on the ability of OCR engines to process different typefaces, languages, and other features. This dependence is especially pronounced for older books with archaic or faded typefaces. Obtaining accuracy close to 100 percent usually requires some level of manual correction. Human intervention in large-scale efforts is minimal, so OCR files do not typically go through a quality control process to identify errors. Some experts believe that the 98–99 percent accuracy achieved by automated OCR is good enough to meet indexing and discovery needs. Other scholars, such as Jean-Claude Guedon, have expressed concern that centuries of progress toward increasingly accurate and high-quality printing could be reversed owing to a lack of OCR quality control and of high standards in LSDIs.66
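One common way to express OCR accuracy is at the character level: 1 minus the edit distance between the OCR output and a corrected transcription, divided by the transcription's length. The source does not say whether the 98–99 percent figures are character-level or word-level. If they are character-level, then on a page of roughly 2,000 characters (an assumed figure) they would still leave about 20 to 40 errors per page. The following is a minimal sketch of the measure. The sample text and function names are hypothetical.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, and substitutions
    needed to turn `a` into `b` (dynamic programming, one row at a time)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                 # delete ca
                           cur[j - 1] + 1,              # insert cb
                           prev[j - 1] + (ca != cb)))   # substitute (or match)
        prev = cur
    return prev[-1]


def char_accuracy(ground_truth: str, ocr_text: str) -> float:
    """Character accuracy = 1 - edit distance / length of the ground truth."""
    if not ground_truth:
        return 1.0 if not ocr_text else 0.0
    return max(0.0, 1 - levenshtein(ground_truth, ocr_text) / len(ground_truth))


truth = "The quick brown fox"
ocr = "Tbe quick brovvn fox"           # typical confusions: h->b, w->vv
print(levenshtein(truth, ocr), f"{char_accuracy(truth, ocr):.1%}")
```

The example prints `3 84.2%`. Measuring accuracy this way requires a corrected transcription, so in practice the measure can be applied only to a sample of pages.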

3.3 Technical Infrastructure

Numerous factors put digital data at risk. Many technologies disappear as product lines are replaced, and backward compatibility is not always guaranteed. The vulnerable elements of the technical infrastructure for digital image collections include the following:

- storage media: at risk for mishandling, improper storage, data corruption, physical damage, or obsolescence

- file formats and compression schemes: at risk for obsolescence

- various application, Internet protocol, and standard dependencies: at risk for impact of updates and revisions on dependent processes and operations

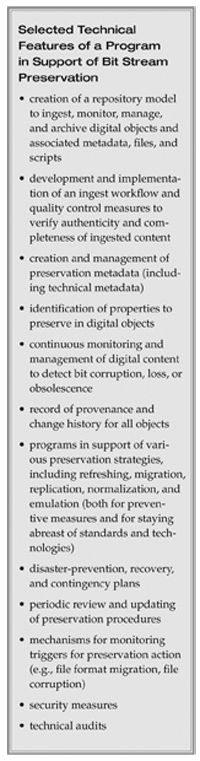

The sidebar at left lists some of the technical procedures involved in preserving digital content. The curatorial strategies listed are being addressed in various library forums. Digital preservation infrastructure relies on a robust computing and networking infrastructure and a scalable storage strategy. This section primarily addresses storage issues, which pose a major challenge for data management and storage architectures because of the sheer amount of data presented by LSDIs.

E-science data initiatives have introduced libraries to the challenges associated with large-scale database storage and retrieval.67 Nonetheless, many participating libraries still have limited experience in data management. An extensive review by the British Library revealed that storage technologies continue to evolve and that data-storage vendors are coming up with new standards and solutions.68 This trend requires the development of solutions that can accommodate expanding content and emerging storage technologies. The findings of the recent Getty Research Institute survey also pointed out the need to rethink infrastructure and storage models.69

According to the Getty international survey of digital preservation systems, 66 percent of the 316 institutions surveyed had less than 10 terabytes (TB) of data, and their storage costs ranged from $400 to $15,000 per TB. The wide range reflects the lack of common metrics in projecting and reporting such expenses. With the exception of two sites that store video, Digital Library Federation (DLF) members representing many LSDIs that responded to the survey reported storing data in the range of 2–20 TB. Cornell University Library’s copy of each digital book created through the Cornell-Microsoft partnership is approximately 700 megabytes (MB). Based on this per-book estimate, Cornell anticipates having to store approximately 60 TB of digital content, representing nearly 100,000 volumes, during the first year of the initiative. By comparison, Cornell accumulated only 5 TB of content through 15 years of digital imaging activities.
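The Cornell projection is a straightforward multiplication, and writing it out makes the assumptions explicit. The following minimal sketch assumes decimal units (1 TB = 1,000,000 MB) and adds a hypothetical `copies` parameter for replicas kept under a redundancy policy. At 700 MB per book, 100,000 books come to 70 TB for a single copy. The 60 TB figure in the text corresponds to roughly 86,000 books, which is consistent with "nearly 100,000 volumes."

```python
def projected_tb(books, mb_per_book, copies=1, mb_per_tb=1_000_000):
    """Projected storage in terabytes (decimal units: 1 TB = 1,000,000 MB).
    `copies` allows for replicas kept under a redundancy/backup policy."""
    return books * mb_per_book * copies / mb_per_tb


def books_that_fit(tb, mb_per_book, mb_per_tb=1_000_000):
    """How many books of a given size fit in `tb` terabytes."""
    return int(tb * mb_per_tb // mb_per_book)


MB_PER_BOOK = 700  # Cornell-Microsoft per-book estimate quoted in the text
print(projected_tb(100_000, MB_PER_BOOK))            # one copy of 100,000 books
print(projected_tb(100_000, MB_PER_BOOK, copies=2))  # with one replica
print(books_that_fit(60, MB_PER_BOOK))               # volumes held in 60 TB
```

Even a single replica doubles the requirement, to 140 TB. This is one reason redundancy and backup protocols appear among the cost factors discussed next.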

Currently there is neither a metric nor a methodology for estimating the resources required for storage. Moreover, storage expenses depend on local information technology (IT) and repository infrastructures and configurations, making generalizations difficult. As libraries acquire more and more digital content, it will be important to understand the current and projected costs of storage.70 While storage hardware costs can be obtained from vendors, there is little detailed information on the operational costs associated with storage. Factors that influence overall costs include quantity of data, server configuration, storage media, storage-management software, projected data-storage needs, data-access time, data-transfer rate, access services supported, and redundancy and backup protocols. Lifecycle Information for E-Literature (LIFE), a JISC-funded joint venture, developed a methodology to calculate the long-term costs and future requirements of preserving digital assets.71 Project staff found that it costs £19 (about US$38) to store and preserve an e-monograph in Year 1; by the tenth year, the total life cycle cost is £30 (US$51). These costs include acquisition, ingest, basic metadata, access, storage, and preservation, but not creation. It is important to be cautious about generalizing the LIFE estimates, as they are based on a small file of approximately 1.6 MB processed through a specific workflow, compared with the estimated 700 MB per digital book created through the Cornell-Microsoft partnership.
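The LIFE methodology breaks life cycle cost into the stages listed above. The Python sketch below mirrors those components under the assumption of a simple additive model; the class and field names are hypothetical and are not part of any LIFE tool, and no LIFE cost figures are built in. It also computes the size ratio between the two objects compared in the text: a 700 MB book is roughly 440 times larger than the 1.6 MB LIFE test file, which is one reason per-object LIFE costs may not carry over to book-scale image files.

```python
from dataclasses import dataclass, fields


@dataclass
class LifecycleCost:
    """Per-object cost components named in the LIFE summary (creation excluded).

    Each value is the cumulative cost for that stage over the modeled period,
    in a single currency. Field names are illustrative, not LIFE's own.
    """
    acquisition: float
    ingest: float
    metadata: float
    access: float
    storage: float
    preservation: float

    def total(self) -> float:
        # Additive model (assumption): total = sum of all stage costs.
        return sum(getattr(self, f.name) for f in fields(self))


def size_ratio(target_mb: float, baseline_mb: float) -> float:
    """How many times larger the target object is than the baseline object."""
    return target_mb / baseline_mb


if __name__ == "__main__":
    # 700 MB digitized book vs. the ~1.6 MB file behind the LIFE estimates
    print(f"Size ratio: {size_ratio(700, 1.6):.1f}x")
```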

An assessment by the National Archives of Sweden revealed that storage media represent only 5 percent to 10 percent of total storage expenses; the bulk of costs are associated with hardware, software, support, maintenance, and administration.72 Some libraries, such as Cornell University Library, pay their home institutions for bandwidth consumed (network usage-based billing), adding yet another storage-cost element. The bandwidth charge for transferring a digital book online from Kirtas in Victor, New York, to Cornell is about 95 cents.73 Scaling this estimate to 100,000 books yields an anticipated additional project cost of about $95,000.74
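Both cost observations in this paragraph reduce to simple arithmetic. The Python sketch below assumes a flat per-book transfer charge (the 95-cent figure corresponds to an average book of roughly 700 MB) and linear scaling. It also shows what the Swedish 5 to 10 percent finding implies: total storage cost is roughly 10 to 20 times the media cost. The $10,000 media spend is a hypothetical input, not a reported figure, and the function names are illustrative.

```python
def transfer_cost_dollars(books: int, cents_per_book: int = 95) -> float:
    """Network charge for transferring `books` volumes at a flat per-book rate.

    Assumes every book costs the same to transfer (linear scaling).
    """
    # Multiply in integer cents, then convert to dollars once.
    return books * cents_per_book / 100


def implied_total_storage_cost(media_cost: float, media_share_pct: float) -> float:
    """Total storage cost implied if media make up `media_share_pct` percent of it."""
    return media_cost * 100 / media_share_pct


if __name__ == "__main__":
    print(f"Transfer of 100,000 books: ${transfer_cost_dollars(100_000):,.0f}")
    media = 10_000.0  # hypothetical media spend, for illustration only
    low = implied_total_storage_cost(media, 10)   # media = 10% of total
    high = implied_total_storage_cost(media, 5)   # media = 5% of total
    print(f"Implied total storage cost: ${low:,.0f} to ${high:,.0f}")
```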

Storage requirements need to be assessed from an information life cycle management perspective to ensure a sustainable storage strategy that balances costs, data management strategies, preservation priorities, and changing use patterns. The life cycle approach requires more complex criteria for storage management than do automated storage procedures such as hierarchical storage management.75 There is also growing emphasis on distributed preservation services, such as replicating content at multiple locations.
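Footnote 75 describes hierarchical storage management as moving data between fast, high-cost and slow, low-cost media according to usage. The Python sketch below shows that usage-only rule as a periodic sweep over last-access times; the tier names, the 90-day threshold, and the object identifiers are hypothetical, and production HSM systems typically also recall data on demand. A life cycle policy would add criteria such as preservation priority, which this rule deliberately ignores.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class StoredObject:
    object_id: str
    last_access: datetime
    tier: str = "disk"  # hypothetical tiers: "disk" (fast, costly) or "tape" (slow, cheap)


def apply_hsm_policy(objects, now, idle_limit=timedelta(days=90)):
    """Usage-only tiering: idle objects go to tape, recently used ones to disk.

    Mutates each object's tier and returns the (object_id, old_tier, new_tier)
    moves performed during this sweep.
    """
    moves = []
    for obj in objects:
        target = "tape" if now - obj.last_access > idle_limit else "disk"
        if target != obj.tier:
            moves.append((obj.object_id, obj.tier, target))
            obj.tier = target
    return moves


if __name__ == "__main__":
    now = datetime(2007, 9, 1)
    items = [
        StoredObject("book-001", now - timedelta(days=10)),
        StoredObject("book-002", now - timedelta(days=400)),
        StoredObject("book-003", now - timedelta(days=5), tier="tape"),
    ]
    for move in apply_hsm_policy(items, now):
        print(move)
```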

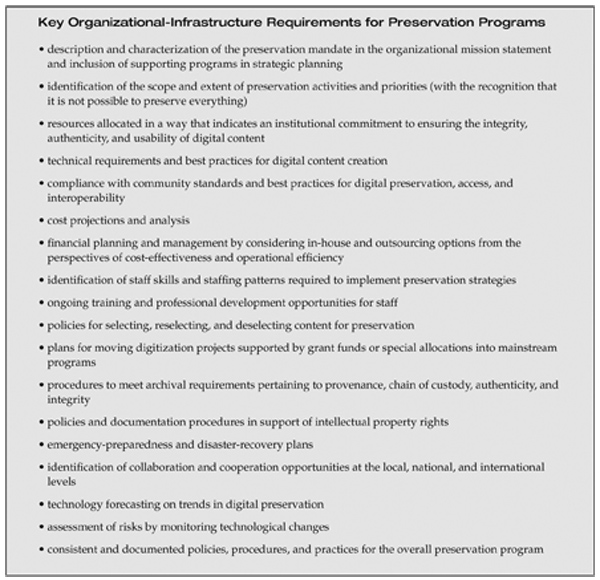

3.4 Organizational Infrastructure

Technology alone cannot solve preservation problems. Institutional policies, strategies, and funding models are also important. Although library forums began addressing digital preservation concerns almost a decade ago, only a handful of libraries today have digital preservation programs that can adequately support large-scale ingest and repository development efforts. Clareson illustrates the gap between digitization and digital preservation practice by pointing out that “except for inclusion in rights and licensing policies, digital holdings are not included in the majority of policy statements for many areas of institutional operation, from mission and goals to emergency preparedness, to exhibit policies.”76 The challenge is not only to incorporate the preservation mandate in organizational mission and programs but also to characterize the goals in a way that will make it possible to understand the terms and conditions of such a responsibility. For example, a long-term archiving mandate is likely to have different requirements than does archiving in support of short-term goals. There are also significant differences between a preservation program that focuses on bitstream preservation and one that encompasses the processes required to provide enduring access to digital content.


Organizational preservation infrastructure (mandate, governance, and funding models) is emerging as a critical factor in determining success. The box above lists the organizational infrastructure requirements needed to support preservation programs. There are several formal standards and best practices in place.77 The following are some examples:

- Open Archival Information System (OAIS). The OAIS reference model addresses a full range of preservation functions, including ingest, archival storage, data management, access, and dissemination.78 Specifically applicable to organizations with long-term preservation responsibilities, it has provided a framework and a common language for digital preservation discussions and planning activities, especially for their technical and architectural aspects.

- Trustworthy Repositories Audit & Certification (TRAC). An OCLC/RLG Programs and National Archives and Records Administration (NARA) task force developed the Audit Checklist for Certifying Digital Repositories as a tool to assess the reliability, commitment, and readiness of institutions to assume long-term preservation responsibilities.79 With the revision and publication of the tool as the TRAC checklist in March 2007, the Center for Research Libraries expressed its intention to contribute to digital repository audit and certification efforts, including guiding further international work on auditing and certifying repositories.80

- Digital Repository Audit Method Based on Risk Assessment (DRAMBORA). Released in March 2007 for public testing and comment, the DRAMBORA toolkit aims to facilitate internal audit by providing preservation administrators with a means to assess their capabilities, identify their weaknesses, and recognize their strengths.81

- Defining Digital Preservation. A working group of the Preservation and Reformatting Section of the Association for Library Collections and Technical Services of the American Library Association (ALA) is drafting a standard operational definition for digital preservation that would be used in policy statements and other documents.82

Although the aforementioned tools and standards are instrumental, digital preservation programs at many libraries and cultural institutions are still in pilot or test modes. In a 2007 review of digital preservation readiness studies, Liz Bishoff stressed the importance of expanding educational opportunities for staff involved in preservation and curation programs.83 Such opportunities are critical to integrating the digital preservation tools and emerging standards into daily practice.

FOOTNOTES

29 A summary of early selection approaches is provided in the following article: Janet Gertz. 1998. Selection Guidelines for Preservation. Joint RLG and NPO Preservation Conference: Guidelines for Digital Imaging. Available at http://www.rlg.org/preserv/joint/gertz.html.

30 Association of Research Libraries. 2004. “Recognizing Digitization as a Preservation Reformatting Method.” ARL: Bimonthly Report 236. Available at http://www.arl.org/bm~doc/digpres.pdf.

31 These data are from unpublished work by Lynn Silipigni Connaway and Edward T. O’Neill, cited in Lorcan Dempsey. 2006. “Libraries and the Long Tail: Some Thoughts about Libraries in a Network Age.” D-Lib Magazine 12(4). Available at http://www.dlib.org/dlib/april06/dempsey/04dempsey.html.

32 Chris Anderson. 2004. “The Long Tail.” Wired 12(10). Available at http://www.wired.com/wired/archive/12.10/tail.html.

33 Lorcan Dempsey. 2006. “Libraries and the Long Tail: Some Thoughts about Libraries in a Network Age.” D-Lib Magazine 12(4). Available at http://www.dlib.org/dlib/april06/dempsey/04dempsey.html.

34 There are exceptions. For instance, Cornell’s partnership with Microsoft includes the digitization of in-house, special collections held at the Rare and Manuscript Collections.

35 OCLC/RLG Programs, Harmonizing Digitization Program: http://www.rlg.org/en/page.php?Page_ID=21020.

36 “The ‘Google Five’ Describe Progress, Challenges.” 2007. Library Journal Academic Newswire (June). Available at http://www.libraryjournal.com/info/CA6456319.html.

37 Brian Lavoie, Lynn Silipigni Connaway, Lorcan Dempsey. 2005. “Anatomy of Aggregate Collections: The Example of Google Print for Libraries.” D-Lib Magazine 11(9). Available at http://www.dlib.org/dlib/september05/lavoie/09lavoie.html.

38 Based on the Functional Requirements for Bibliographic Records (http://www.ifla.org/VII/s13/frbr/frbr.htm), a work is a distinct intellectual or artistic creation. The study included all the holdings in participating libraries and was not limited to materials selected for digitization at LSDI institutions.

39 Ben Bunnel. 2007. Librarian Wanted: Part II. Blog posted March 22 to Google Librarian Central. Available at http://librariancentral.blogspot.com/2007/03/librarian-wanted-part-ii.html.

40 DLF/OCLC Registry of Digital Masters: http://www.oclc.org/digitalpreservation/why/digitalregistry/. The registry database is available at http://purl.oclc.org/DLF/collections.

41 Information about the decision of libraries to contribute both public domain and in-copyright material was obtained from their press releases and the aforementioned FAQs.

42 Brian Lavoie, Lynn Silipigni Connaway, Lorcan Dempsey. 2005. “Anatomy of Aggregate Collections: The Example of Google Print for Libraries.” D-Lib Magazine 11(9). Available at http://www.dlib.org/dlib/september05/lavoie/09lavoie.html.

43 Section 108 provides exceptions that allow libraries and archives to undertake certain activities, otherwise not permitted, for purposes of preservation and, in some cases, replacement. There is intense discussion about the elements of Section 108 in regard to who can do what for what purposes and under what conditions. Circular 92: http://www.copyright.gov/title17/92chap1.html#108.

44 Section 108 Study Group: http://www.loc.gov/section108/.

45 Peter Hirtle analyzes a range of preservation-related issues and concludes that copyright in regard to digital preservation is an especially murky area because it is necessary to copy digital information in order to preserve it. See http://fairuse.stanford.edu/commentary_and_analysis/2003_11_hirtle.html.

46 Section 4.11 (Release of In-Copyright Works Held in Escrow) of Google’s agreement with CIC states that Google will hold the “University Copy” of scanned works. It lists the conditions under which the copies will be released to the contributing libraries, including if the in-copyright work becomes public domain or if the library party has obtained permission through contractual agreements with copyright holders. See http://www.cic.uiuc.edu/programs/CenterForLibraryInitiatives/Archive/PressRelease/LibraryDigitization/AGREEMENT.pdf.

47 The Metadata Encoding and Transmission Standard (METS) schema is used for encoding descriptive, administrative, and structural metadata for digitized pages. More information about METS is available at http://www.loc.gov/standards/mets/. MIX is an XML schema developed for recording and managing the Technical Metadata for Digital Still Images (ANSI/NISO Z39.87-2006). The standards page is at http://www.loc.gov/standards/mix/.

48 The Scribe system used by the OCA requires a human operator to turn pages and monitor the images. OCA Scribe includes two commercial-grade cameras, and the open books rest at 90° on an adjustable spring cradle.

49 David Bearman offers an opinion piece on Jean-Noël Jeanneney’s comments on the Google initiative. One of Jeanneney’s critiques is that Google images books sloppily because its primary interest is harvesting works to link to advertising. See David Bearman. 2006. “Jean-Noël Jeanneney’s Critique of Google: Private Sector Book Digitization and Digital Library Policy.” D-Lib Magazine 12(12). Available at http://www.dlib.org. On the basis of a case study, Paul Duguid has pointed out problems with scans, metadata, and edition information in Google Book Search. He concludes that Google’s powerful search tools cannot make up for a lack of metadata. See Paul Duguid. 2007. “Inheritance and Loss? A Brief Survey of Google Books.” First Monday 12(8) [August]. Available at http://www.firstmonday.org/issues/issue12_8/duguid/. The discussion in reaction to the article is available at http://radar.oreilly.com/archives/2007/08/the_google_exch.html.

50 For a thorough review of the digitization approaches used during the past 10 years, see Steven Puglia and Erin Rhodes. 2007. “Digital Imaging: How Far Have We Come and What Still Needs to be Done?” RLG DigiNews 11(1) [April 15]. Available at http://www.rlg.org/en/page.php?Page_ID=21033.

51 For a discussion on the difference between lossy and lossless compression, see Moving Theory into Practice: Digital Imaging Tutorial, Cornell University Library. Available at http://www.library.cornell.edu/preservation/tutorial/intro/intro-07.html.

52 Puglia and Rhodes 2007, op. cit.

53 For example, Macbeth ColorChecker is used to inspect color fidelity and to control color space during file format migration and other image processing activities.

54 The information about DICE is based on e-mail correspondence with Don Williams, technical imaging consultant, standards and image quality, in July 2007.

55 The 2006 report Digital Image Archiving Study, issued by the Arts and Humanities Data Service, includes a comprehensive discussion of various raster image file formats and reviews their advantages and disadvantages for preservation purposes. Available at http://ahds.ac.uk/about/projects/archiving-studies/digital-images-archiving-study.pdf.

The March 2007 CENDI report assessed file formats for preserving government information. It is not focused on digital images; however, it is a useful document for understanding format assessment factors for digital preservation. CENDI Digital Preservation Task Group. March 2007. Formats for Digital Preservation: A Review of Alternatives. Available at http://www.cendi.gov/publications/CENDI_PresFormats_WhitePaper_03092007.pdf.

56 TIFF: http://partners.adobe.com/public/developer/tiff/index.html.

57 JPEG2000 file format information: http://www.jpeg.org/jpeg2000/.

58 For an in-depth discussion of file formats, see Tim Vitale. Digital Image File Formats—TIFF, JPEG, JPEG2000, RAW, and DNG. July 2007, Version 20. Available at http://aic.stanford.edu/sg/emg/library/pdf/vitale/2007-07-vitale-digital_image_file_formats.pdf.

59 PREMIS: http://www.oclc.org/research/projects/pmwg/.

60 Z39.87: Data Dictionary—Technical Metadata for Digital Still Images. Available at http://www.niso.org/standards/standard_detail.cfm?std_id=731.

61 Metadata-extraction tools such as JHOVE and NLNZ Metadata Extractor Tool generate standardized metadata that is compliant with PREMIS and Z39.87.

62 The NARA Technical Guidelines for Digitizing Archival Materials for Electronic Access define approaches for creating digital surrogates for facilitating access and reproduction; they are not considered appropriate for preservation reformatting to create surrogates that will replace original records. See Steven Puglia, Jeffrey Reed, and Erin Rhodes. June 2004. Technical Guidelines for Digitizing Archival Materials for Electronic Access: Creation of Production Master Files Raster Images. Available at http://www.archives.gov/preservation/technical/guidelines.pdf.

63 Oya Y. Rieger. 2000. “Establishing a Quality Control Program.” Pp. 61-83 in Moving Theory into Practice: Digital Imaging for Libraries and Archives, by Anne R. Kenney and Oya Y. Rieger. Mountain View, Calif.: Research Libraries Group.

64 For example, Cornell University Library uses a locally developed application to automatically check images for matching MD5 checksums, the availability of OCR and position data, blank pages, etc.

65 Robert B. Townsend. 2007. “Google Books: What’s Not to Like?” AHA Today (April 30). Available at http://blog.historians.org/articles/204/google-books-whats-not-to-like. Also see footnote 50.

66 U.S. National Commission on Libraries and Information Science. Mass Digitization: Implications for Information Policy. Report from Scholarship and Libraries in Transition: A Dialogue about the Impacts of Mass Digitization Projects. Symposium held March 10-11, 2006, at the University of Michigan, Ann Arbor. Available at http://www.nclis.gov/digitization/MassDigitizationSymposium-Report.pdf.

67 The following article covers the storage and networking challenges faced by educational institutions in supporting the emerging technical infrastructures: Thomas J. Hacker and Bradley C. Wheeler. 2007. “Making Research Cyberinfrastructure a Strategic Choice.” Educause Quarterly 30(1): 21-29. Available at http://www.educause.edu/ir/library/pdf/EQM0713.pdf.

68 Jim Linden, Sean Martin, Richard Masters, and Roderic Parker. February 2005. The Large-Scale Archival Storage of Digital Objects. Digital Preservation Coalition Technology Watch Series Report 04-03. Available at http://www.dpconline.org/docs/dpctw04-03.pdf.

69 The International Digital Preservation Systems Survey conducted by Karim Boughida and Sally Hubbard from the Getty Research Institute intended to provide an overview of digital preservation system implementation. Comments are based on e-mail exchange with Boughida in June 2007. In the context of the survey, a digital preservation system is defined as an assembly of computer hardware, software, and policies equivalent to a trusted digital repository with a mission of providing reliable, long-term access to managed digital resources.

70 Moore et al. describe current estimates of both disk and tape storage based on operational experience at the San Diego Supercomputer Center, which operates a large-scale storage infrastructure. See Richard L. Moore, Jim D’Aoust, Robert McDonald, and David Minor. 2007. “Disk and Tape Storage Cost Models.” Archiving (May): 29-32.

71 The LIFE Project: Lifecycle Information for E-Literature. 2006. Available at http://www.ucl.ac.uk/ls/life/.

72 Jonas Palm. 2006. The Digital Black Hole. Stockholm: Riksarkivet. Available at http://www.tape-online.net/docs/Palm_Black_Hole.pdf.

73 The average size of a digitized book is about 700 megabytes.

74 As an alternative to direct network transfers, the storage team has explored the methods and costs involved in shipping external hard drives or high-storage computers loaded with page image files between Victor and Ithaca. Using physical storage devices for transportation is a cumbersome, risky, and expensive process. In addition to the insecurity of physical transport, there are hardware incompatibilities between the Microsoft and Sun platforms.

75 Hierarchical storage management is a data-storage method to ensure cost-effectiveness based on data-usage patterns. The system monitors how data are used and automatically moves data between high-cost (faster devices) and low-cost (slower devices) storage media.

76 Tom Clareson. 2006. “NEDCC Survey and Colloquium Explore Digitization and Digital Preservation Policies and Practices.” RLG DigiNews 10(1) [February]. Available at http://www.rlg.org/en/page.php?Page_ID=20894.

77 A review of prevailing preservation standards and protocols is discussed in Nancy Y. McGovern. 2007. “A Digital Decade: Where Have We Been and Where Are We Going in Digital Preservation?” RLG DigiNews 11(1) [April 15]. Available at http://www.rlg.org/en/page.php?Page_ID=21033#article3.

78 ISO 14721:2003 OAIS: http://www.iso.org/iso/en/CatalogueDetailPage.CatalogueDetail?CSNUMBER=24683&ICS1=49&ICS2=140&ICS3.

79 Audit Checklist for Certifying Digital Repositories: http://www.rlg.org/en/page.php?Page_ID=20769.

80 Core Requirements for Digital Archives: http://www.crl.edu/content.asp?l1=13&l2=58&l3=162&l4=92.

81 UK Digital Curation Centre (DCC) and Digital Preservation Europe (DPE). Digital Repository Audit Method Based on Risk Assessment. March 2007. Available at http://www.repositoryaudit.eu/.

82 Defining Digital Preservation: http://blogs.ala.org/digipres.php.

83 Liz Bishoff. 2007. “Digital Preservation Assessment: Readying Cultural Heritage Institutions for Digital Preservation.” Paper presented at DigCCurr 2007: An International Symposium in Digital Curation, April 18–20, 2007, Chapel Hill, N.C. Available at http://www.ils.unc.edu/digccurr2007/papers/bishoff_paper_8-3.pdf.