Tools for Humanists Project

Final Report

April 18, 2008

Council on Library and Information Resources

Lilly Nguyen and Katie Shilton

Contents

1. Introduction—Why Tools?

2. Research Questions

3. Methodology

4. Results and Observations

5. Recommendations

6. Lessons Learned and Implications for Future Research

7. Bibliography

8. Appendixes

1. Introduction—Why Tools?

Digital tools are an important component of the cyberinfrastructure that supports digital humanities research (UVA 2005). Tools for humanities research (software or computing products developed to provide access to, interpret, create, or communicate digital resources) are increasingly developed and supported by Digital Humanities Centers (DHCs) and the wider digital humanities community. Such tools represent a significant investment of research and development time, energy, and resources.

Tools are distinct from other assets that DHCs develop and cultivate, such as resources and collections. Researchers use tools to access, manipulate, or interpret resources or collections, while resources are “data, documents, collections, or services that meet some data or information need” (Borgman 2007). In a recent report on cyberinfrastructure for the humanities and social sciences, the American Council of Learned Societies (2006) distinguished tools from collections by emphasizing that tool building is dependent on the existence of collections. Resources or collections may be associated with a tool and may serve as an indicator of a tool’s functionality and value, but are not themselves tools. As such, we define tools as software developed for the creation, interpretation, or sharing and communication of digital humanities resources and collections.

Because tools provide the action (rather than the subject) of digital humanities research, digital tools are one of the most extensible assets within the digital humanities community. Researchers can share tools to perform diverse and groundbreaking research, making them a critical part of the digital humanities cyberinfrastructure. If these tools are not visible, accessible, or understandable to interested researchers, they become less likely to be used broadly, less able to be built upon or extended, and therefore less able to support and broaden the research for which they are intended. CLIR’s interest in supporting the cyberinfrastructure of digital humanities has spurred us to evaluate the landscape of digital tools available for humanities research.

2. Research Questions

CLIR’s concern for the accessibility and clarity of tools grows out of a larger study of the characteristics of digital humanities centers, which frequently make their tools available to researchers. This context prompted the two research questions that guide this evaluation project.

RQ1: How easy is it to access DHC tools?

RQ2: How clear are the intentions and functions of DHC tools?

The evaluation research outlined in this report answers these questions by delineating variables that respond to the goals of accessibility and clarity. We use these variables to evaluate a purposive sample of 39 digital humanities tools.

3. Methodology

Following a scope of work provided by CLIR, this evaluation project focused on defining elements that contribute to findable, usable digital tools and on ranking existing DHC tools according to these elements. Our first challenge was to clarify the definition of digital tool through a literature review on cyberinfrastructure and digital humanities. This allowed us to refine and define distinct characteristics of digital tools and to delineate a sample set of tools hosted by the DHCs listed in Appendix B (page 48). Section 3.a. details this first phase of the evaluation research.

Once we had determined our sample, the next step was to create an evaluation framework and instrument. Concentrating on CLIR’s evaluation interests of findability and usability, we surveyed our sample of 39 tools and looked for elements that made tools easy to access and understand. We describe the process of creating an evaluation framework in Section 3.b.

After drafting our sample set and scales, we submitted an evaluation strategy to CLIR for approval. We then performed several trial evaluations to check for interindexer consistency. This is detailed in Section 3.b.iii. After several iterations of this consistency check, we divided the 39 tools in half, and each researcher evaluated her assigned tools. We then combined our data and began the data analysis, described in Section 4.

3.a. Definitions, Sample, Limitations, and Assumptions

3.a.i. Definitions

We defined tools for humanists as software intended to provide access to, create, interpret, or share and communicate digital humanities resources. Further, the tools evaluated in this project are products of the digital humanities community and are designed to be extensible, that is, used with resources beyond those provided by the creating institution. We grounded this definition within a typology of digital tools drawn from the wider digital humanities literature. Our typology defines tools according to three dimensions: objectives, technological origins, and associated resources.

Tools as defined by objectives

Based on digital humanities literature, researchers use digital tools for the following objectives:

- access and exploration of resources: to make specialized content “intellectually as well as physically accessible” (Crane et al. 2007); and

- insight and interpretation: to enable the user to find patterns of significance and to interpret those patterns (ACLS 2006).

In addition, based on our observation of DHCs in the United States, we propose two further tool objectives:

- creation: to make new digital objects or digital publications from humanities resources; and

- community and communication: to share resources or knowledge.

These four objectives guided the selection of our sample tools from the DHC sites identified in Diane Zorich’s work. They also suggest future evaluations that extend beyond accessibility and clarity to assess how well DHC tools support and facilitate these critical functions. Given CLIR’s interest in questions relating to the clarity of and access to tools, we have not yet explored the use, value, or effectiveness of tools according to these objectives. That is to say, we did not consider issues of performance, which is a promising area for future consideration.

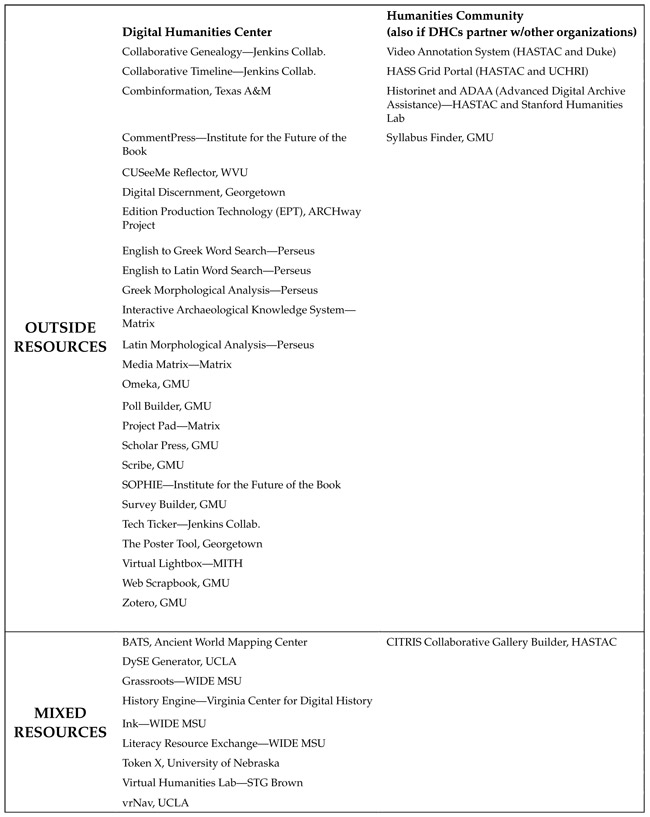

Tools as defined by site of technological origin

On the basis of our observation of DHC Web sites, we found variation in communities of tool authorship. Some tools were the product of a single center (e.g., the Berlin Temporal Topographies built by Stanford Humanities Lab). Some tools started outside of the humanities community, but centers or cooperatives adapted them heavily for humanities research (e.g., Syllabus Finder, adapted from the Google search engine by George Mason University’s [GMU’s] Center for History and New Media). Some tools were developed outside of the humanities community and appropriated, with little or no modification, by the humanities community (e.g., blogs or wikis). The spectrum of technological origins thus ranged from single-center authorship to appropriation from an outside community (see Table 1). For our final sample group, we considered only tools authored by a single institution or by a collaboration of institutions in the digital humanities community.

Tools as defined by associated resources

Our observation of DHC Web sites also illustrated that tools vary along a spectrum according to the resources with which they interact. Some tools work only with resources provided by the center (e.g., the Women’s Studies Database at the Maryland Institute for Technology in the Humanities). Other tools can interact with resources provided by the center in addition to outside resources (e.g., BATS assistive technology created by the Ancient World Mapping Center). Finally, some tools work exclusively with outside resources, and centers provide no in-house collections for use with the tool (e.g., the Center for History and New Media’s Omeka digital display tool). For our study, we focused on tools that interact (1) with resources provided by the center and outside resources, and (2) with outside resources only. We excluded tools that interact only with in-house resources because extensible tools, which allow individuals to explore or analyze their own data and resources, are the most useful to researchers and of the most interest to the broader digital humanities infrastructure: they enable broad community use as well as highly customizable, individualized research.

3.a.ii. Evaluation Sample

Given the limitation to extensible tools, we confined our survey of DHC tools to items created by or adapted within the humanities community that were designed for use with outside resources or a mix of outside and in-house resources. We excluded tools that had been developed in the outside (nondigital humanities) community or that had been developed to function with only a single collection or resource. This narrowed our sample to 39 tools for evaluation. Table 1 groups the 39 tools according to two variables: (1) technological development (developed by a DHC or a humanities community); and (2) associated resources (usable with outside resources or mixed resources).

3.a.iii. Research Limits and Assumptions

This evaluation of tools created by DHCs is part of a much larger CLIR survey of the landscape of DHCs, and that survey determined certain features of our study. In particular, it predetermined the population of centers from which we drew our sample; we identified tools from each of these centers. We also excluded certain parameters that we might otherwise have considered in defining the scope. Specifically, we did not use user population as a means of selecting the sample of tools to study, and we used a limited understanding of the idea of “findability.”

Based on the literature, we assumed that a wide swath of faculty, independent researchers, university staff, and graduate and undergraduate students use humanities cyberinfrastructure (ACLS 2006). Findability bears heavily on questions of accessibility of digital tools and concerns users’ ability to search for and find tools without previous knowledge of them. As such, findability would encompass a wider range of information-seeking technologies, including search engines, and would reflect the highly complicated, and more realistic, landscape that users encounter when searching for digital humanities tools. To limit our evaluation to accessibility and clarity of intention and function within the given DHC settings, we did not evaluate findability in this general sense; we focused solely on tool accessibility within the DHC sites themselves. Findability depends upon the metadata associated with tools, as well as on the structure of the system supporting the Web sites that provide the first point of access for users, since Web crawlers (and hence indexing) may go only two or three levels into a site. Evaluating the existing search engines, systems, and metadata structures and standards associated with tools would therefore be valuable follow-up research. Given the limits and needs of CLIR’s research directive, however, findability from outside the Web site was beyond the scope of this project.

We have also made some basic assumptions about users. In order to evaluate accessibility that excludes findability, we assumed users who already know that tools are available, and who know to explore DHC Web sites for tools. We evaluated whether such a user can easily find the tool on a DHC’s Web site, easily understand the tool’s intention, and easily begin using the tool.

Table 1: Matrix of Tools for Evaluation (Final Sample Group)


Another assumption made early in our work, and one that has not proved entirely tenable, is that tools could be downloaded. Such tools are easy to envision: they are discrete pieces of software run on a user’s own computer and resources. However, a focus on software download is increasingly irrelevant in an era when both storage and computing power are moving into the “cloud” (i.e., the combined computing power of servers owned by others) (Borgman 2007). We therefore evaluated tools that can be downloaded (e.g., UCLA’s Experimental Technologies Center’s vrNav virtual reality software) as well as tools used online and supported by the servers of others (e.g., the University of Nebraska Center for Digital Research in the Humanities’ Token X text-visualization tool). When evaluating the usability of these tools, we also considered the clarity of the process of using them with data sets or resources, whether on a user’s computer or in the cloud.

3.b. Evaluation Framework and Instrument

We designed this evaluation framework to answer our research questions:

- How easy is it to access DHC tools?

- How clear are the intentions and functions of DHC tools?

Based on these questions, we created two scales:

- Ease of Access: Discovering Tools

- Clarity of Use: Enabling Use of Tools

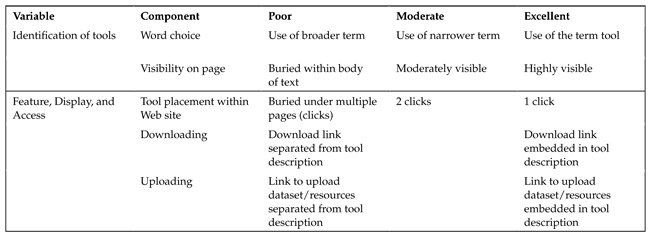

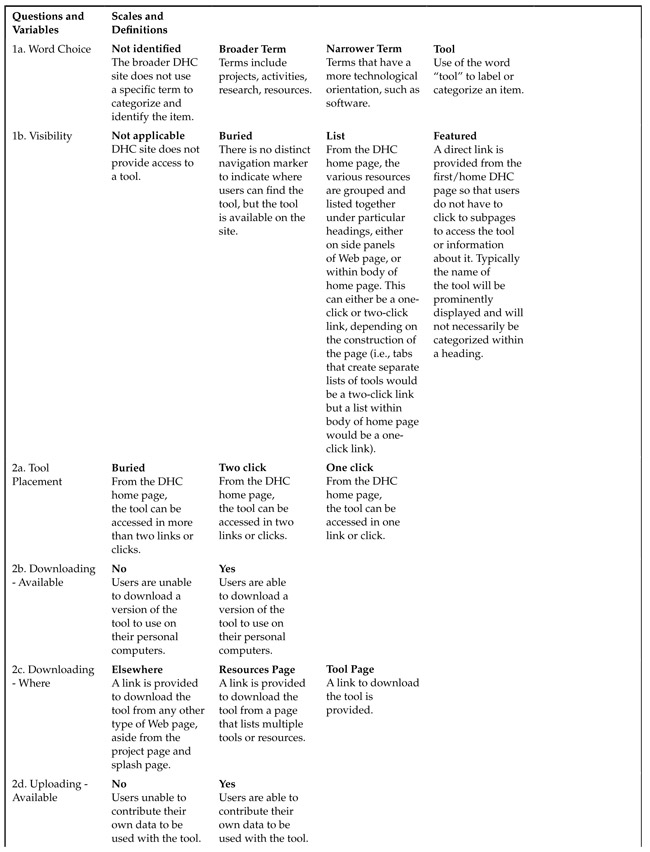

To address these research questions, we developed scales to measure the strength of each of the 39 tools with regard to four variables: (1) identification of tool; (2) feature, display, and access; (3) clarity of description; and (4) clarity of operation. To construct measurable scales, we divided the variables into distinct indicators that we could rank as poor, moderate, or excellent. The next sections describe the indicators and variables. We conclude the evaluation framework with a table that provides details on the entire evaluative schema.
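The roll-up from indicators to variables, scales, and a total score can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the instrument itself: the point values for poor, moderate, and excellent (0, 1, 2) and the indicator names in the example are hypothetical; only the four variables and the two scales come from this section.

```python
from typing import Dict

# Hypothetical mapping from ratings to points (an assumption for illustration).
RATING_POINTS = {"poor": 0, "moderate": 1, "excellent": 2}

# The two scales and four variables described in Section 3.b.
SCALES = {
    "Ease of Access": ["identification of tool", "feature, display, and access"],
    "Clarity of Use": ["clarity of description", "clarity of operation"],
}


def score_tool(ratings: Dict[str, Dict[str, str]]) -> Dict[str, int]:
    """Sum indicator ratings into variable scores, then into scale and total scores.

    `ratings` maps each variable to {indicator name: rating}.
    """
    variable_scores = {
        variable: sum(RATING_POINTS[rating] for rating in indicators.values())
        for variable, indicators in ratings.items()
    }
    result = {
        scale: sum(variable_scores.get(v, 0) for v in variables)
        for scale, variables in SCALES.items()
    }
    result["Total"] = sum(variable_scores.values())
    return result


# Hypothetical ratings for one tool; indicator names are illustrative only.
example = {
    "identification of tool": {"word choice": "excellent", "visibility": "moderate"},
    "feature, display, and access": {"placement": "excellent", "download or upload": "poor"},
    "clarity of description": {"function stated": "moderate", "user group stated": "poor"},
    "clarity of operation": {"preview": "excellent", "support": "moderate", "instructions": "poor"},
}
print(score_tool(example))  # {'Ease of Access': 5, 'Clarity of Use': 4, 'Total': 9}
```

Keeping the scale membership in a single table makes it easy to see which variables feed each of the two research questions.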

3.b.i. Ease of Access: Discovering Tools

This scale includes variables that represent the process of discovering and accessing available tools, such as:

- ways in which DHCs identify tools to users (in terms of language and word choice and visibility on the page); and

- how DHCs feature, display, and provide access to tools on their Web sites through placement within the Web site and access to downloading the tool or uploading data.*

* Exporting the results of data-tool interaction did not seem to be an emphasis in the tools we examined. For example, tools such as Token X allow users to manipulate their data on the tool’s site but not to export the altered data. Uploading data is also not always a question of uploading data to a tool site: several tools allow users to download the tool and then upload data to it, with everything staying on the user’s computer. There are thus many possible permutations of downloading, uploading, local, and cloud computing.

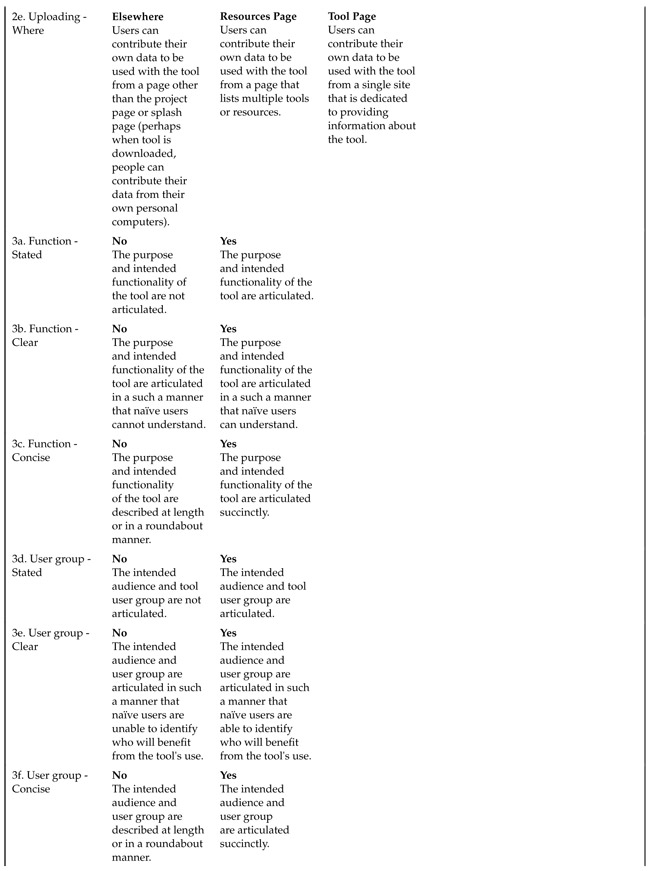

See Table 2.

Table 2: Ease-of-Access Scale

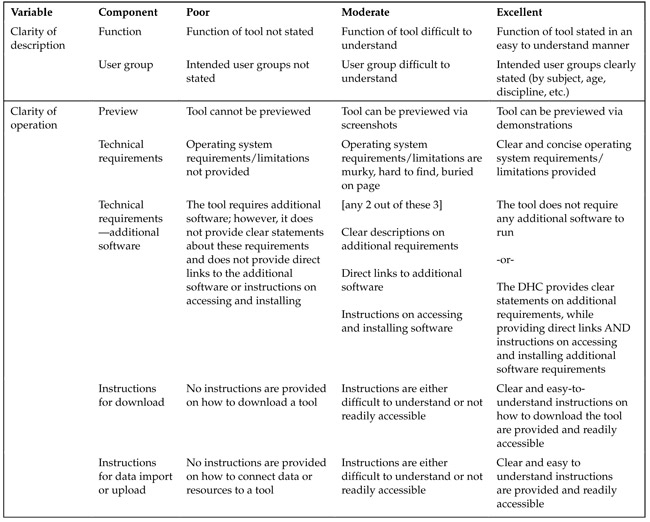

3.b.ii. Clarity of Use: Enabling the Use of Tools

We also evaluated the 39 tools on a scale representing the clarity of each tool’s intentions and functions. This scale depends upon variables that represent the process of interacting with a tool after discovery. Clarity-of-use variables include:

- Clarity of tool description: Are the tool’s functions and target user group clearly and concisely stated? Clear and concise descriptions enable and encourage individuals to use and download the tools.

- Clarity of tool operation: Can the tool be previewed? Can most users operate the tool on their systems? Is it clear how users can import or upload their datasets or resources for use with the tool?

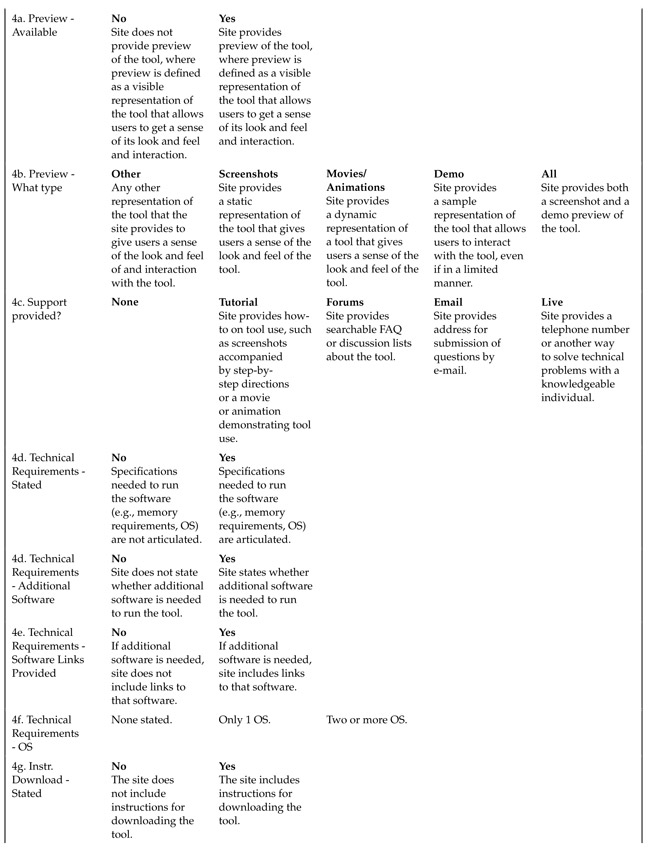

See Table 3.

Table 3: Clarity-of-Use Scale


3.b.iii. Interindexer Consistency

To ensure interindexer consistency, we selected two tools, and each researcher (Katie and Lilly) coded them independently. After coding, the researchers compared scores. Interindexer consistency after the first evaluation was only 32 percent. To improve consistency, the researchers identified the points of divergence and discussed why they had coded the tools differently. Each researcher explained her justification and definitions. Together, the researchers created a granular, detailed definition of each variable to fully standardize the evaluation metrics (see Appendix F-2 for the granular scale definitions). After two more rounds of evaluation and discussion, during which the researchers each coded a total of seven tools, interindexer consistency reached 100 percent.
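The consistency check above can be sketched as a percent-agreement calculation. This section does not specify the statistic, so the sketch assumes simple percent agreement over paired codes; the codes in the example are hypothetical and chosen only to reproduce a 32 percent figure.

```python
from typing import Sequence


def percent_agreement(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Percentage of items that two coders scored identically (assumed statistic)."""
    if len(coder_a) != len(coder_b):
        raise ValueError("both coders must score the same items")
    if not coder_a:
        raise ValueError("no items to compare")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)


# Hypothetical codes for 25 indicators: the two coders agree on 8 of them.
coder_1 = ["excellent"] * 8 + ["moderate"] * 17
coder_2 = ["excellent"] * 8 + ["poor"] * 17
print(percent_agreement(coder_1, coder_2))  # 32.0
```

Simple percent agreement does not correct for agreement expected by chance; a chance-corrected statistic such as Cohen’s kappa would be a stricter alternative.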

4. Results and Observations

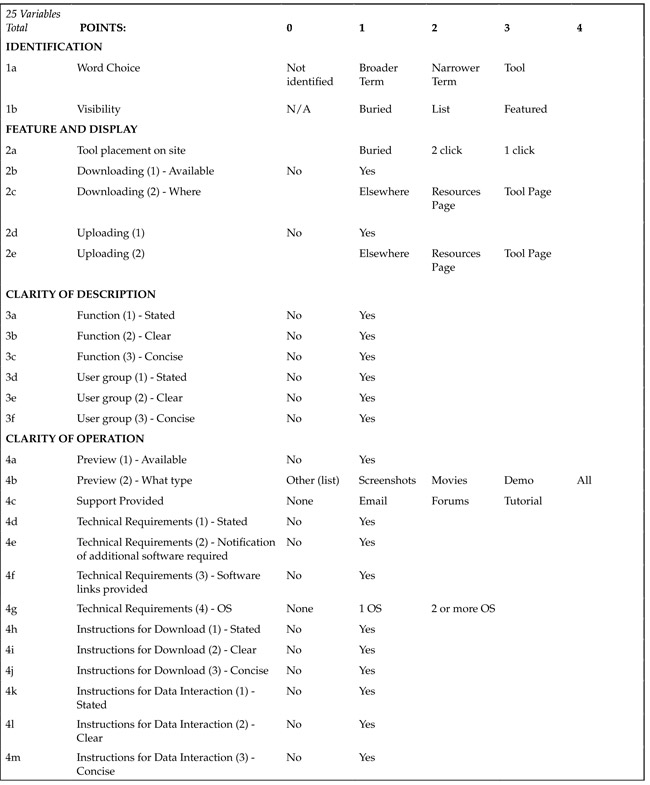

To organize data collection around the variables discussed above, we applied the following data collection instrument to each of the 39 tools. For each variable, we gave tools a numerical score based on the definitions below:

Table 4: Variables Scales


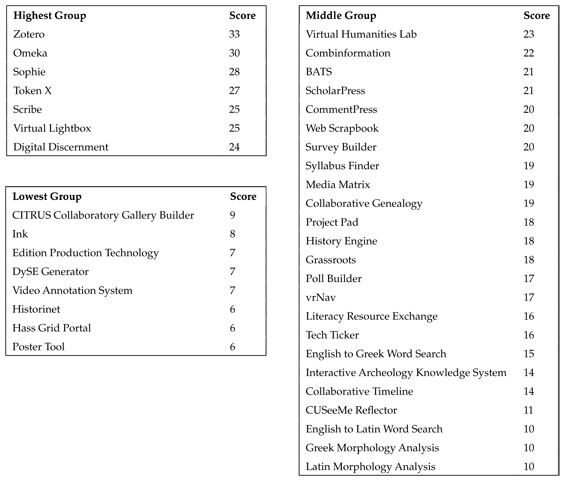

Total scores for the 39 tools ranged from 6 to 33 points. We calculated the mean and standard deviation of the tools’ total scores (mean = 17, SD = 7) and used one standard deviation above and below the mean as cutoffs to divide the tools into three groups:

- Tools scoring 24 points or above were categorized within the highest-scoring group.

- Those scoring between 10 and 23 points were placed within the middle-scoring set.

- Those scoring 9 points or less were categorized within the lowest-scoring group.
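The grouping rule above can be sketched in Python. The cutoffs follow from the reported figures (17 + 7 = 24 and 17 − 7 = 10); the use of the sample rather than the population standard deviation, and rounding to whole points, are assumptions of this sketch.

```python
from statistics import mean, stdev
from typing import Dict, Iterable, Tuple


def cutoffs(totals: Iterable[int]) -> Tuple[int, int]:
    """Mean and standard deviation of total scores, rounded to whole points.

    Uses the sample standard deviation (an assumption; the report does not say).
    """
    values = list(totals)
    return round(mean(values)), round(stdev(values))


def classify(total: int, mean_score: int, sd: int) -> str:
    """Assign a tool to a group using cutoffs one SD above and below the mean."""
    if total >= mean_score + sd:  # 24 or above when mean = 17, SD = 7
        return "highest"
    if total < mean_score - sd:   # 9 or below when mean = 17, SD = 7
        return "lowest"
    return "middle"               # 10 to 23


def group_tools(totals: Dict[str, int]) -> Dict[str, str]:
    """Map each tool name to its group, deriving the cutoffs from the totals."""
    m, sd = cutoffs(totals.values())
    return {name: classify(score, m, sd) for name, score in totals.items()}


# Using the reported mean and SD directly:
for score in (33, 24, 23, 10, 9, 6):
    print(score, classify(score, 17, 7))
```

Deriving the cutoffs from the data, rather than hard-coding 24 and 10, keeps the rule reusable if the sample changes.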

The highest-scoring group comprised 7 tools, the middle group 24 tools, and the bottom group 8 tools. Organizing the tools into three sets allowed us to average individual variable scores within each set. This enabled us to compare the major differences among the groups. Table 5 shows which tools fell within each of the groups.
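Averaging each variable within a group, as in the per-group breakdown reported in Table 6, can be sketched as follows. The tool names and scores in the example are hypothetical and serve only to show the computation.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict


def group_averages(
    variable_scores: Dict[str, Dict[str, float]], groups: Dict[str, str]
) -> Dict[str, Dict[str, float]]:
    """Average each variable's score across the tools in each group.

    variable_scores maps tool -> {variable: score}; groups maps tool -> group label.
    """
    buckets = defaultdict(lambda: defaultdict(list))
    for tool, scores in variable_scores.items():
        for variable, score in scores.items():
            buckets[groups[tool]][variable].append(score)
    return {
        group: {variable: mean(values) for variable, values in by_variable.items()}
        for group, by_variable in buckets.items()
    }


# Hypothetical scores for three tools; names and numbers are illustrative only.
scores = {
    "Tool A": {"clarity of description": 4, "clarity of operation": 6},
    "Tool B": {"clarity of description": 3, "clarity of operation": 4},
    "Tool C": {"clarity of description": 2, "clarity of operation": 1},
}
groups = {"Tool A": "highest", "Tool B": "highest", "Tool C": "lowest"}
print(group_averages(scores, groups))
```

Comparing these per-group averages variable by variable shows where the highest-scoring tools pull ahead.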

Table 5: Highest, Lowest, and Middle Tool Groups


4.a. Ease of Access

Feature and display: Word choice was a major factor distinguishing the highest-rated tools, which tended to use the specific word “tool” rather than a general term such as “project” or “resource.” Tool placement on the site was another distinguishing feature: highest-scoring tools were often one click away from the DHC’s home page, while bottom tools were two or more clicks away. Visibility of the tools was universally mediocre. Most DHCs included tools in long lists of projects or resources; only a few DHCs featured tools prominently or separately.

Most tools were available for download or equipped to allow users to upload their data; a few allowed both. Among tools that did provide download or upload capability, however, how easily users could locate that capability set the highest-scoring tools apart. The lowest-ranking tools suffered from hard-to-find download or upload options. In a few cases, downloading or uploading was not available even for tools that had been under development for several years.

4.b. Clarity of Use

Clarity of description: Most tools stated their function in some form, but few of these statements were clear or concise, and clarity and conciseness set the highest-ranking tools apart from lower-rated tools. Only three tools did not state their function at all. Statements of user group separated tools in the same way. A slim majority of tools stated their user group in some form (sixteen out of thirty-five tools did not), and tools in the highest-scoring group typically described their user groups clearly and concisely, making it easy to infer who would benefit most from using the tool.

Clarity of operation: Availability and type of preview were another distinguishing factor. Highest-scoring tools not only made previews available but used sophisticated interactive previews such as demos, rather than static forms such as screenshots. Highest-scoring tools offered support in the form of tutorials, forums, and FAQs, in addition to providing e-mail support. The highest-rated tools also clearly stated technical requirements for using the tool and provided links to any required additional software. Additionally, tools in the top group were more likely to provide cross-platform usability, supporting more than one operating system. Perhaps the most glaring problem was the pervasive lack of clear, concise instructions for downloading or for data interaction: 29 of 39 tools offered no download instructions, and 22 of 39 offered no instructions for data interaction.

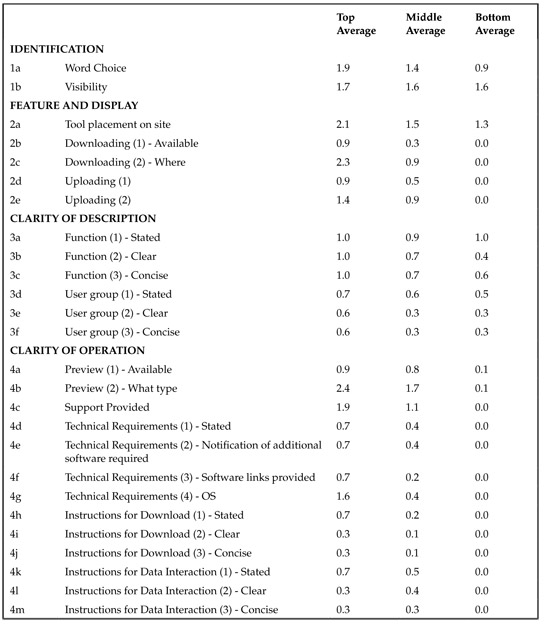

Table 6 provides a breakdown of all the variables among the three tool groups.

Table 6: Ranked Average Score Breakdown


4.c. Discussion

Overall, the 39 tools surveyed here performed better on variables measuring ease of access than on variables measuring clarity of use. Most of the DHC sites provided adequate-to-excellent access to tools through appropriate word choices that aid users in identifying tools, and tool placement within the design of the DHC home page that allows users to discover tools while browsing.

However, access to these tools was often impeded by low visibility of, and obscured access to, downloading and uploading features. Clarity of use was a widely problematic dimension of existing tools. Statements summarizing the basic functionality of a tool appeared to be the most frequent technique employed by tool developers to clarify tool use. However, the highest-scoring tools also supplemented these descriptions with (1) detailed statements documenting technical requirements for tool use; (2) sophisticated previews to allow users a sense of the look, feel, and interaction with the tool; and (3) additional support for users in the forms of tutorials, FAQs, manuals, or forums.

We noticed a few problems not captured in our variable scale but worth mentioning. As we progressed through our evaluation, we came across the phenomenon of orphan tools: tools that are operational but not linked to or referred to by their DHC in any way. Five such tools, MSU’s Media Matrix and four translation tools authored by the Perseus project, are not linked from their DHC sponsors’ Web sites.

A number of tools do not feature download or upload capabilities because they are not ready for public consumption. For newer tools (such as MSU’s Interactive Archeological Knowledge System), this is understandable, but some of these tools seem to have been under development for quite some time. Tools such as CITRIS Collaborative Gallery Builder, HASTAC’s Video Annotation System, MSU’s Ink, and Stanford Humanities Lab’s Historinet are not ready for public use though they have been under development for several years.

Finally, we noticed a number of tools that appear to have been abandoned by their creators. Often the code is available for other developers to work on, but there is no further development occurring at the DHC. Examples include the Ancient World Mapping Center’s BATS, Matrix’s Project Pad, and STG Brown’s Virtual Humanities Lab.

5. Recommendations

On the basis of this evaluation, we offer the following recommended best practices for tool design for humanities scholars.

(1) Feature tools. Highlighting your tools using Web design and language draws desired users to the software that a DHC has spent time and effort developing. Best practices for featuring tools include using appropriate word choices. A specific term like tool allows users to find and use relevant software more quickly. Another important measure is featuring the tool on the DHC’s Web site using design techniques, rather than burying it in a bulleted list of projects or resources.

(2) Clarify the tool’s purpose and audience. Users investigating a tool need to know both the intended function of the tool and whether the tool is appropriate for their uses. Clear, concise information about your tool’s purpose and audience will help users make this decision.

(3) Make previews available. The more a user can find out about a tool before downloading it or uploading data, the better. Screenshots, tutorials, and demos can provide users with helpful information regarding the look and feel of your tool.

(4) Provide support. Including an e-mail address for users who have questions is a start, but FAQs and searchable forums are also valuable aids to clarity and successful tool operation.

(5) State technical requirements. Users need to know whether they can download or use a tool with their current technology. State and provide links to any additional software needed to help users make this determination. If your tool needs nothing but a Web browser, say so! Enable use through clear requirements.

(6) Provide clear, easy instructions for download or data interaction. This critical step for clarity of tool use was almost universally lacking in our sample. Without directions, users will have trouble installing your tool, or uploading their data for use with your tool.

(7) Plan for sustainability. Keeping a tool available after the grant period has run out is a major challenge. During tool creation, plan how you will keep the tool available to users, and even continue to iterate on and improve it, after the development period has ended.

6. Lessons Learned and Implications for Future Research

From the beginning of this project, the term tool proved slippery and problematic. Digital humanities center sites featured projects, resources, software, and occasionally tools, but it was difficult to determine the parameters that lead to identification of a tool. This led to a lengthy definition-building process at the beginning of this research. We hope the elements of a tool that we have delineated (objectives, site of development, and associated resources) will introduce precision and enable greater rigor in subsequent research.

This research had several limitations that we recommend be addressed in future projects. Given the scope of the larger project within which this evaluation was embedded, our notion of findability covered only the identification, features, and display of a tool within a DHC site. This view disregards tool findability from outside the centers via search engines, browsing, and other routes that may more accurately reflect everyday user scenarios. This broader concept of findability bears on several structural issues, including search engine functionality, metadata associated with tools, and DHC site structure. A broader study of findability is a fertile area that could expand our understanding of the relationship between DHCs and tools, as well as of digital tools’ ability to function as viable components of an emerging cyberinfrastructure.

Additional limitations of our evaluation schema became apparent during our analysis of the data. For instance, our evaluation scales favor complex tools that require either uploading of data or downloading of the tool. Simple tools, such as the Web-based Syllabus Finder from GMU, were at a disadvantage in this schema. Though we believe Syllabus Finder to be a very helpful and elegant tool, it received low downloading and uploading scores because it was entirely Web based. It also received a low score in clarity of operation, particularly on technical requirements, because there was little need to state its sole requirement, a Web browser. This case suggests that Web-based and downloaded tools may call for different models of visibility and usability. As Web-based applications become increasingly popular, we should reexamine what sorts of documentation and technical specification they should provide.

While our report illustrates a first level of usability for digital humanities tools (access and clarity), we believe there may be an important second level of usability based upon the field’s objectives for tool-based research. A future research question to pursue may be “How well do existing DHC tools respond to the criteria of, and uses for, ‘tools’?” As our research questions focused primarily on accessibility and clarity, we excluded tool objectives from our current evaluation of digital tools. We suggest that researchers consider these criteria for further evaluation, as they may provide more insight into the quality of existing tools, and into future development needs in the digital humanities.

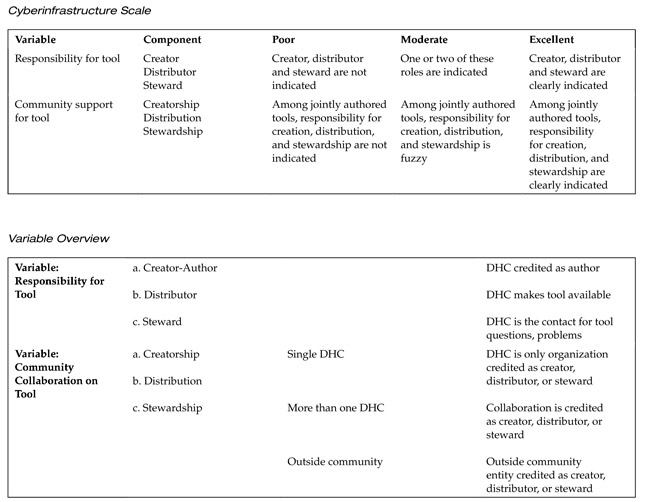

Finally, early in the project, we identified questions of institutional support as a valuable factor in defining use and access. This line of inquiry generated additional variables for consideration and additional scales for analyses; however, after careful consideration we felt this particular question would be beyond the scope of the project and perhaps more useful for a follow-up analysis. Appendix F-1 provides the scales and variables associated with this question for possible future use.

7. Bibliography

1. University of Virginia. 2006. Summit on Digital Tools for the Humanities: Report on Summit Accomplishments. Charlottesville, VA: University of Virginia.

2. Borgman, C. L. 2007. Scholarship in the Digital Age: Information, Infrastructure, and the Internet. Cambridge, MA: MIT Press.

3. American Council of Learned Societies Commission on Cyberinfrastructure for the Humanities and Social Sciences. 2006. Our Cultural Commonwealth. Washington, DC: American Council of Learned Societies.

4. Crane, G., A. Babeu, and D. Bamman. 2007. eScience and the Humanities. International Journal on Digital Libraries 7:117-122.

8. Appendixes

Appendix F-1:

Tools as Cyberinfrastructure, Institutional Support

We believe a future project could evaluate the 39 tools explored here on a scale that represents the nature of the institutional support for each tool. Such a scale would provide a descriptive account of the types of institutional support that tools receive from DHCs. Describing institutional support for a tool includes:

- a DHC’s roles of responsibility for the tool; and

- the level of community collaboration surrounding a tool.


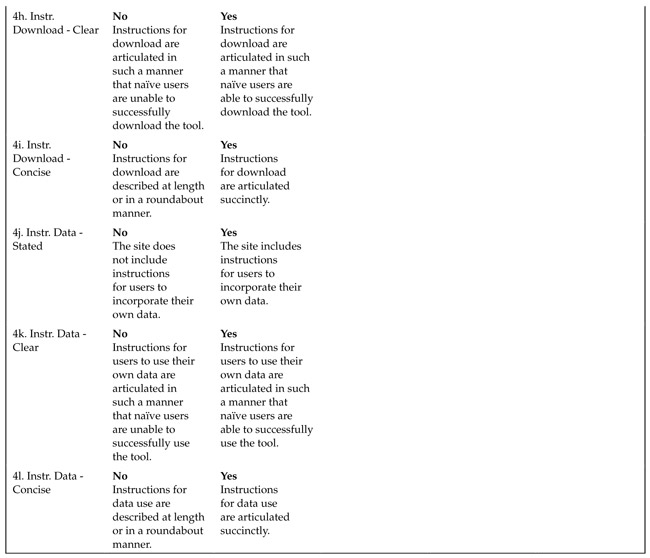

Appendix F-2: Scales and Definitions
