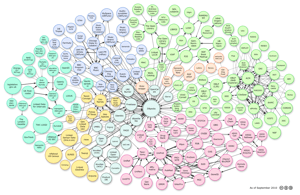

1. The Linked Open Data cloud

a. Description

The cloud’s “ home” describes the data being represented thusly:

| This image shows datasets that have been published in Linked Data format, by contributors to the Linking Open Data community project and other individuals and organisations. It is based on metadata collected and curated by contributors to the CKAN directory. Clicking the image will take you to an image map, where each dataset is a hyperlink to its homepage. |

As the full color-coded image shows (click on the miniature above), the data represents a variety of topical areas:

- media

- geographic

- publications

- user-generated content

- government

- cross-domain

- life sciences

b. Mike Bergman

While the cloud continues to grow in size and complexity with more varied data all the time, Mike Bergman’s commentary cites issues (also noted by others) that warrant consideration:

… This growth and increase in visibility is also being backed by a growing advocacy community, which were initially academics but has broadened to also include open government advocates and some publishers like the NY Times and the BBC. But, with the exception of some notable sites, which I think also help us understand key success factors, there is a gnawing sense that linked data is not yet living up to its promise and advocacy. Let’s look at this from two perspectives: growth and usage.

[excerpts, as follow]

Growth

While I find the visible growth in the LOD cloud heartening, I do have some questions:

- Is the LOD cloud growing as quickly as its claimed potential would suggest? I suspect not. Though there has been about a tenfold growth in datasets and triples in three years, this is really from a small base. Upside potential remains absolutely huge

- Is linked data growing faster or slower than other forms of structured data? Notable comparatives here would include structure in internal Google results; XML; JSON; Facebook’s Open Graph Protocol, others

- What is the growth in the use of linked data? Growth in publishing is one thing, but use is the ultimate measure. I suspect that, aside from specific curated communities, uptake has been quite slow (see next sub-section).

Usage

I am much more troubled by the lack of actual use of linked data. To my knowledge, despite the publication of endpoints and the availability of central access points like Openlink Software’s lod.openlinksw.com, there is no notable service with any traction that is using broad connections across the LOD cloud. [access to lod.openlinksw.com varies, information about the service can be had here –editor].

Rather, for anything beyond a single dataset (as is DBpedia), the services that do have usefulness and traction are those that are limited and curated, often with a community focus. Examples of these notable services include :

- The life sciences and biomedical community, which has a history of curation and consensual semantics and vocabularies

- FactForge from Ontotext, which is manually cleaned and uses hand-picked datasets and relationships, all under central control

- Freebase, which is a go-to source for much instance data, but is notorious for its lack of organization or structure

- Limited, focused services such as Paul Houle’s Ookaboo (and, of course, many others), where there is much curation but still many issues with data quality (see below).

These observations lead to some questions:

- Other than a few publishers promoting their own data, are there any enterprises or businesses consuming linked data from multiple datasets?

- Why are there comparatively few numbers of links between datasets in the current LOD cloud?

- What factors are hindering the growth and use of linked data?

We’re certainly not the first to note these questions about linked data. Some point to a need for more tools. Recently others have looked to more widespread use of RDFa (RDF embedded in Web pages) as possible enablers. While these may be helpful, I personally do not see either of these factors as the root cause of the problems.

The Four Ps

Readers of this blog well know that I have been beating the tom-toms for some time regarding what I see as key gaps in linked data practice [3]. The update of the LOD cloud diagram and my upcoming keynote at the Dublin Core (DCMI) DC-2010 conference in Pittsburgh have caused me to try to better organize my thoughts.

I see four challenges facing the linked data practice. These four problems-thefour Ps-are predicates, proximity, provision and provenance. Let me explain each of these in turn.

Problem #1: Predicates

For some time, the quality and use of linking predicates with linked data has been simplistic and naïve. This problem is a classic expression of Maslow’s hammer,” if all you have is a hammer, everything looks like a nail.” The most abused linking property (predicate) in this regard is owl:sameAs.

Problem #2: Proximity (or, “is About”)

Going back to our first efforts with UMBEL, a vocabulary of about 20,000 subject concepts based on the Cyc knowledge base [6 ], we have argued the importance of using well-defined reference concepts as a way to provide “aboutness” and reference hooks for related information on the Web. These reference points become like stars in constellations, helping to guide our navigation across the sea of human knowledge.

Problem #3: Provision of Useful Information

We somewhat controversially questioned the basis of how some linked data was being published in an article late last year, When Linked Data Rules Fail [4]. Amongst other issues raised in the article, one involved publishing large numbers of government datasets without any schema, definitions or even data labels for numerically IDed attributes.

Problem #4: Provenance

There are two common threads in the earlier problems. One, semantics matter, because after all that is the arena in which linked data operates. And, second, some entities need to exert the quality control, completeness and consistency that actually enables this information to be dependable.

Both of these threads intersect in the idea of provenance.

c. On the Perils of Reconciliation

Stefano Mazzocchi, The E pistemology of T ruth: Every person that deals with data and data integration, especially at big scales, sooner or later comes to a very key but scary choice to make: deciding whether truth is discovered or invented.

Sure, there are various shades between those options, but either you believe in a metaphysical reality that is absolute truth and you just have to find a way to discover it. Or you don’t and what’s left is just human creation, social contracts, collective subjectivity distilled by hundreds of years of echo chambers.

Deciding where you stand on this choice influences dramatically how you think, how you work, who you like to work with and what efforts you feel drawn to and want to be associated with.

What is also surprising about this choice is how easy it is to make: just like with religion, you either have faith in something you can only aspire to know, or you don’t. And both sides can’t really understand how the other can’t see what’s so obvious to them.

This debate about invention vs. discovery, objectivity vs. subjectivity, physical vs. metaphysical, embodied vs. abstract has been raging for thousands of years and takes many forms but what’s striking is how divisive it is and how incredibly persistent over time, like a sort of benign and widespread memetic infection (a philosophical cold, if you allow me).

What interests me about this debate is its dramatic impact on knowledge representation technologies.

The question of truth seems easy enough at first, but it gets tricky very quickly. Let me show you.

[snip]

Last but not least, people in this field hate to talk about this problem, because they feel it brings too much doubt into the operational nature of what they’re trying to accomplish and undermines the entire validity of their work so far. It’s the elephant in the room, but everybody wants to avoid it, hoping that with enough data collected and usefulness provided, it will go away, or at least it won’t bother their corner.

I’m more and more convinced that this problem can’t be ignored without sacrificing the entire feasibility of useful knowledge representation and even more convinced that the social processes needed to acquire, curate and maintain the data over time need to be at the very core of the designing of such systems, not a secondary afterthought used to reduce data maintenance costs.

Unfortunately, even the most successful examples of such systems are still very far away from that…. but it’s an exciting problem to have nonetheless.

2. Individual sources, projects, etc.

The objective here is to provide a selective scan of the wide range of resources that now produce stable, well-curated URIs (or projects that actively are working toward such capabilities) that can be used in linked-data environments.

Tthe workshop’s efforts will see the entries in this section change markedly with (many) additions likely.

a. Reconciled Aggregations

(1) Freebase … a hub for multiple strong (e.g., oclc#) and soft (e.g., ISBN) IDs

Status and a glimpse of futures since Google’s acquisition:

The Structured Search Engine and excerpt of slides from 1st 10 minutes

January 19, 2011, GoogleTechTalks

ABSTRACT Structured search is about giving the search engine a deeper understanding of documents and queries and how they relate to real-world entities. Using this knowledge, we can build search products that lead users directly to their answers, or assemble disparate data into a more unified interface that helps users make better decisions. This talk will present a variety of ways in which a better understanding of structure and semantics is helping Google push the boundaries of search.

(2) <SameAs> The web of data has many equivalent URIs. This service helps you to find co-references between different data sets. Enter a known URI, or use Sindice to search first.

(3) VIAF ( Virtual International [name] Authority File)

[ OCLC data use policy does not apply]

But, conversations with others indicate restrictions are in fact in place.

b. Curated URIs … related to cultural heritage endeavors

(1) Names various projects other than VIAF, which is cited above

Two summaries of activities related to names:

(a) Library of Congress

- Name Authority File published as MADS/RDF and SKOS/RDF

- Linked data for MARC Code L ist for R elators (actor, adapter, etc.)

(b) DNB Personennamendatei ( Name Authority File, PND)

(c) ARDC ( Australian Research Data Commons)

The Australian Research Data Commons (ARDC) Party Infrastructure Project is a National Library of Australia (NLA) project funded by the Australian National Data Service (ANDS). The project aims to enable improved the discovery of research outputs and data through persistently identifying Australian researchers and research organisations.

Work on this project will focus on adapting the NLA’s People Australia infrastructure to enable authority control for research outputs and for data. As such it will encourage better management and curation of data generated by Australian research and supports the long-term access, use and re-use of this data.

A detailed description of the ARDC Party Infrastructure is available in the files attached to the ARDC Party Infrastructure Project overview page.

(d) ISNI ( International Standard Name Identifier) draft ISO 27729

… identification of Public Identities of parties: that is, the identities used publicly by parties involved throughout the media content industries in the creation, production, management, and content distribution chains.

(e) JISC Names Project blog

April 2011 status report, Amanda Hill

The project partners, the British Library and Mimas, already had a successful background of jointly providing the Zetoc service for the UK academic community ( http://zetoc.mimas.ac.uk/). Zetoc gives access to information about the millions of journal articles and conference proceedings which have been added to the holdings of the British Library since 1993. Consequently, it holds names of individuals who have contributed to those materials, many of whom are currently active researchers: the sort of people who may be depositing copies of their work into institutional and subject-based repositories.

The fit between the contents of Zetoc and the aims of the Names Project was too good to overlook. The project team decided to use the information in Zetoc to create skeleton name authority records.

(f) NSTIC ( National Strategy for Trusted Identifiers in Cyberspace)

Alex Howard (O’Reilly) posts A Manhattan Project for Online Identity, A Look at the White House’s NSTIC:

In 1993, Peter Steiner famously wrote in the New Yorker that ” on the Internet, nobody knows you’re a dog.” In 2011, trillions of dollars in e-commerce transactions and a growing number of other interactions have made knowing that someone is not only human, but a particular individual, increasingly important.

Governments are now faced with complex decisions in how they approach issues of identity, given the stakes for activists in autocracies and the increasing integration of technology into the daily lives of citizens. Governments need ways to empower citizens to identify themselves online to realize both aspirational goals for citizen-to-government interaction and secure basic interactions for commercial purposes.

It is in that context that the United States federal government introduced the final version of its National Strategy for Trusted Identities in Cyberspace (NSTIC) this spring. The strategy addresses key trends that are crucial to the growth of the Internet operating system: online identity, privacy, and security.

The NSTIC proposes the creation of an “identity ecosystem” online, “where individuals and organizations will be able to trust each other because they follow agreed upon standards to obtain and authenticate their digital identities.” The strategy puts government in the role of a convener, verifying and certifying identity providers in a trust framework.

First steps toward this model, in the context of citizen-to-government authentication, came in 2010 with the launch of the Open Identity Exchange (OIX) and a pilot at the National Institute of Health of a trust frameworks-but there’s a very long road ahead for this larger initiative. Online identity, as my colleague Andy Oram explored in a series of essays here at Radar, is tremendously complicated, from issues of anonymity to digital privacy and security to more existential notions of insight into the representation of our true selves in the digital medium …

(g) ORCID ( Open Researcher & Contributor ID)

- MIT, Harvard, and Cornell are the recipients of a $45,000 grant from The Andrew W. Mellon Foundation to undertake a business feasibility study for ORCID.

- JISC report on the ORCID presentation in London, November 2010

- Spring 2011 CNI presentation

(h) VIVO: Enabling N ational N etworking of S cientists

VIVO is an open source semantic web application originally developed and implemented at Cornell. When installed and populated with researcher interests, activities, and accomplishments, it enables the discovery of research and scholarship across disciplines at that institution and beyond. VIVO supports browsing and a search function which returns faceted results for rapid retrieval of desired information. Content in any local VIVO installation may be maintained manually, brought into VIVO in automated ways from local systems of record, such as HR, grants, course, and faculty activity databases, or from database providers such as publication aggregators and funding agencies.

Presentations:

July 6, 2011 update on expected interactions (or lack thereof) with ISNI and ORCID

(2) DOIs … DOIs as linked data … Ed Summers posts:

Last week Ross Singer alerted me to some pretty big news for folks interested in Library Linked Data: CrossRef has made the metadata for 46 million Digital Ob jec t I dentifiers (DOI) available as Linked Data. DOIs are heavily used in the publishing space to uniquely identify electronic documents (largely scholarly journal articles). CrossRef is a consortium of roughly 3,000 publishers, and is a big player in the academic publishing marketplace.

So practically what this means is that all the places in the scholarly publishing ecosystem where DOIs are present (caveat below), it’s now possible to use the Web to retrieve metadata associated with that electronic document.

(3) Topics … treated by various projects, some of which were cited earlier

(a) BnF RAMEAU (Répertoire d’Autorité-Matière Encyclopédique et Alphabétique Unifié)

This is an experimental service that provides the RAMEAU subject headings as open linked data. This site, a result of the TELplus project, aims at encouraging experimentation with Rameau on the semantic web.

(b) Cyc and OpenCyc [ cited with commentary here]

(c) Deutsche National Bibliothek Dokumentaiton des linked data services

(d) Library of Congress Subject Headings [ cited with commentary here]

(e) New York Times release of subset of subject descriptors [ how to use the linked data]

(f) UMBEL [ cited with commentary here]

(4) Geodata … various types of information

- GeoNames … 10+ million geographical names and 7.5 million unique features LinkedGeoData … spatial knowledge base derived from Open Street Map

- MARC L ist for C ountries … identifies current national entities

- MARC L ist for G eographic A reas … countries, political divisions, regions, etc.

(5) Google

(a) Google Art: Artworks from 17 of the world’s leading institutions

[via Eric Franzon’s post at Semanticweb.com and his updated post]

Christophe Guéret noticed that there was something missing: machine-readable, semantic data.

Christophe has a PhD in Computer Science and is based in Amsterdam at the Vrije Universiteit where he works on LATC, a European Union funded project.

In a few short hours, between meetings and other work, Cristophe created a semantic wrapper for the Google Art Project, the “GoogleArt2RDF wrapper.” This wrapper affects the entire Google Art Project eco-system by offering such a wrapping service for any painting made available through GoogleArt.

Let’s take Vincent Van Gogh’s “Starry Night” for example. The human-friendly interface is visible at http://www.googleartproject.com/museums/moma/the-starry-night.

Thanks to Christophe, Starry Night now also has RDF data associated with it:

http://www.googleartproject.com/museums/moma/the-starry-night.

[from the update]

This wrapper, initially offering semantic data only for individual paintings, has now been extended to museums. The front page of GoogleArt is also available as RDF, providing a machine-readable list of museums. This index page makes it possible, and easy, to download an entire snapshot of the data set so let’s see how to do that.

[and this from June 2011]

This is the first version-Google very aware not perfect, and things to improve. Want to increase geographical spread of museums included. Have had over 10 million visitors and 90k people create ‘my collections’-importance of making things social and being able to share-and realised need to develop that feature further.

Art Project took 18 months to get up and running-felt like a long time-but takes time, and long term project for Google-next phase over next couple of years

from Laura Scott (Google UK) at Open Culture 2011

(b) Google Books project

Note that the Data API is something of a moving target.

Commentary by Johnathan Rochkind sheds some light on what’s searchable and what’s being returned as part of the Google Data (Atom) response. He notes that searches by LCCN are possible and provides this sample Data API search [ formatted view and Atom xml]. Included in

the Atom feed are:

dc:creator

dc:date

dc:description [in this case a brief journal title + publishing history]

dc:format

dc:identifier [Google Books ID]

dc:identifier [a Harvard barcode string]

dc:language

dc:subject

dc:title

And this from Bill Slawski at SEO by the Sea:

Unlike Web pages, there are no links in books for Google to index and use to calculate PageRank. There’s no anchor text in links to use as if it were metadata about pages being pointed towards. Books aren’t broken down into separate pages that have a somewhat independent existence of their own the way that Web pages do, with unique title elements and meta descriptions and headings. There isn’t a structure of internal links in a book, with file and folder names between pages or sections that a search engine might used to try to understand and classify different sections of a book, like it might with a website.

A Google patent granted today describes some of the methods that Google might follow to index content found in books that people might search for. It’s probably not hard for the search engine to perform simple text based matching to find a specific passage that might be mentioned in a book. It’s probably also not hard to find all of the books that include a term or phrase in their title or text or which were written by a specific author. But how do you rank those? How do you decide which to show first, and which should follow?

Google was granted a patent on Query-independent entity importance in books …

Searching in Google’s Book Search (The SEO of Books?)

(6) Other Resources

(a) Cultural Heritage … STITCH ( SemanTic Interoperability To access Cultural Heritage)

(b) Graphic Materials… a Library of Congress thesaurus

… tool for indexing visual materials by subject and genre/format

(c) Languages… Library of Congress ISO 639-2 codes ISO 639-5 codes

(d) Moving Images

- LinkedMDB … Linked Movie DataBase

- NoTube … trying to match people and TV content using rich linked data

(e) OCLC … some exemplars of what could be built over open bibliographic data.

Free services up to 1,000 requests per day (non-commercial use)

- xISBN returns associated ISBNs and relevant metadata (sorted by occurences in WorldCat)

NB: service queries WorldCat database created by OCLC’s FRBR algorithm

- xISSN groups different editions together, some with historical relationships to other groups

- xOCLCNUM, LCCN, OCLCworkID returns associated OCLC numbers and brief metadata

Other services

- Identities … summary page for every name in WorldCat (open for non-commercial)

- MapFAST … prototype mashup of geographic FAST headings (Faceted Application of Subject Terminology) and Google Maps

OCLC’s recent presentations on these services are listed here.

(f) OpenCorporate … open data base of companies in any country